Keep your Yocto layer simple! Introducing meta-kiss, a working reference Yocto/OE setup

At Bootlin we help many of our customers who use Yocto/OpenEmbedded to build the Linux software stack running on their end products. While doing so, we have seen all sorts of problems caused by overly complicated code in their build system setup. So we wondered what we could do to improve the overall situation.

Category: Technical

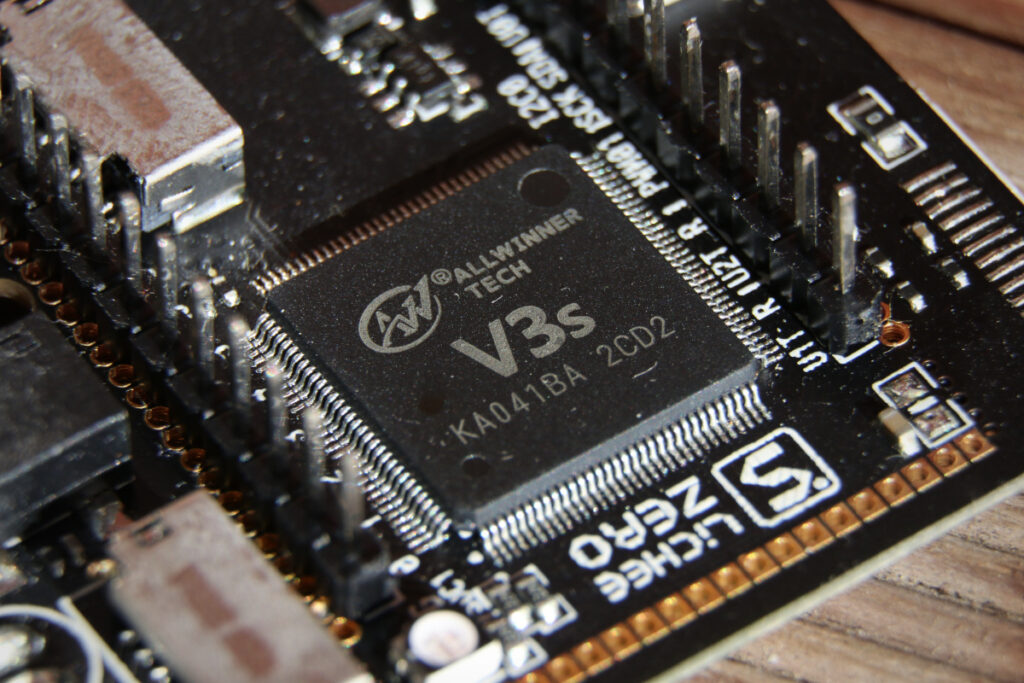

Open-source Linux kernel support for the Allwinner V3/V3s/S3 H.264 video encoder

Bootlin has been involved in improving multimedia support for Allwinner platforms in the upstream Linux kernel for many years. This includes notable contributions such as initial support for hardware-accelerated video decoding of MPEG-2, H.264 and H.265 following the successful crowdfunding campaign in 2018, support for the MIPI CSI-2 camera interface, and early support for the Allwinner V3/V3s/S3 Image Signal Processor (ISP) in mainline Linux.

In addition to this work on Allwinner platforms, in 2021 we also developed and released initial Linux kernel support for the Hantro H1 H.264 stateless video encoder, used on Rockchip processors, on top of the mainline verisilicon/hantro driver.

Today Bootlin is happy to announce the release of Linux kernel support for H.264 video encoding with the Allwinner V3/V3s/S3 platforms, in the form of a series of patches on top of the mainline Linux cedrus driver (which already supports decoding) and a dedicated userspace test tool. The code is available in the following repositories:

- GitHub: bootlin/linux (cedrus/h264-encoding branch)

- GitHub: bootlin/v4l2-cedrus-enc-test

This work is both the result of our internal research and a continuation of earlier projects carried out by members of the linux-sunxi community to document and implement early proofs of concept for H.264 encoding on older platforms such as the A20 and H3. We would like to warmly thank the authors of these previous contributions, which made our life significantly easier.

Adding support for H.264 encoding required a significant architectural rework of the driver, which was rather messy and not very well structured (it was of course quite difficult for us to find the most elegant way of writing the driver back in 2018, when we were just getting started on the topic). With a clear organization and adequate abstractions in place, it became much easier to add encoding support and focus on the hardware-specific aspects.

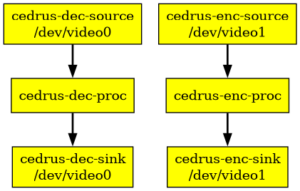

Just like decoding, encoding is based on the V4L2 M2M framework and shares the same V4L2 and M2M devices with decoding (meaning that only a single job of either decode or encode can be processed at a time). Two video device nodes are exposed to userspace, allowing as many decoding and encoding contexts as needed to exist simultaneously. Advanced H.264 codec features such as CABAC entropy coding and P frames are supported.

However, this work is not yet suitable for inclusion in the mainline Linux kernel, since there is no well-defined userspace API (uAPI) for exposing stateless encoders. We have started discussions to converge towards an acceptable proposal that would be generic enough to avoid device-specific considerations in userspace, while also benefiting from all the features and specificities that stateless encoders can provide. While these discussions are still in progress, others have expressed interest in the same topic for the VP8 codec. Our previous work published in 2021 for the Hantro H1 H.264 stateless video encoder is also not upstream yet for the same reason: the need for a well-defined userspace API to expose stateless encoders.

Besides the introduction of a relevant new uAPI, a number of challenges remain in the path towards full support for Allwinner H.264 video encoding in mainline Linux:

- The rework of the driver needs to be submitted and merged upstream;

- Rate control is currently not implemented and only direct QP (quantization parameter) controls are available;

- This work only covers the Allwinner V3/V3s/S3 platforms, while most other generations also feature different (yet rather similar) H.264 encoder units that could also be supported with some dedicated effort;

- Pre-processing features such as scaling and pixel format conversion are not yet supported;

- Userspace library support (such as FFmpeg or GStreamer) would need to be developed to make use of the stateless encoder uAPI.

If you are interested in funding the effort to help us advance any of these topics, feel free to get in touch with us to start the discussion!

Back from Netdev 0x17

At Bootlin, we focus on embedded Linux development and support, and these embedded devices often have a network interface, be it an Ethernet port, a wireless chip or some other kind of communication channel that falls under the Linux networking stack.

So it's always interesting to see what the rest of the community is working on, and to meet in person the people we interact with on the netdev mailing list.

That's why this year, Alexis Lothoré and Maxime Chevallier flew to Vancouver to participate in the Netdev conference, a 5-day event organised by the Netdev Society, a small non-profit run by volunteers and dedicated to holding this event.

Most talks at Netdev do not directly cover topics we're actively working on, but it's always refreshing to see new and exciting technologies that could trickle down to the embedded world a few years from now. It is also always interesting to stay up to date with the challenges encountered by other parts of the networking industry, at scales very different from the ones we are used to.

We learned, for example, what CXL is about, what it brings, and the efforts being made to design new networking hardware around this technology to change the way we think about datacenter networking.

When we attended Netdev 0x13 in 2019, QUIC was one of the hot topics. This year, Homa was under the spotlight with talks on what it is, and how this new protocol could address some of TCP’s problems.

As in all previous editions, we learned about the progress made on TC and its future, new ways of bypassing the kernel stack, BPF integration in the kernel, and XDP, which keeps getting more and more powerful.

Another hot topic in the kernel is the introduction of the Rust language, and the networking subsystem is a pretty relevant target for the new features brought by the language. Rust subsystem maintainers Miguel Ojeda and Wedson Almeida Filho gave an overview of Rust's benefits compared to traditional C code, and then showed a step-by-step implementation of a kernel-side TCP server module. While this example is not perfectly representative of the classic network drivers we usually write, it was a nice showcase of the current state of kernel API abstractions in Rust.

We also discovered the new use case that now drives most datacenter networking efforts, which is, unsurprisingly, AI and Machine Learning. It turns out that if you want your ChatGPT to give up-to-date answers without making you wait too long, you need a powerful and well-organized datacenter for the training part, and network engineering plays a big part in keeping all those GPUs fed at a sufficient pace.

This led to the devmem TCP effort, which felt a bit familiar to us as it uses dma-buf, which we also sometimes use in multimedia pipelines. The ML and AI topic was introduced to us by the wonderful keynote session given by Manya Ghobadi, who captivated the whole audience with how AI and ML work, and what AI workloads require in terms of network traffic scheduling, datacenter topology and computing hardware, including hardware that uses optical computing.

On the final day, we even had a visit from Jakub Kicinski (one of the co-maintainers of the Linux networking tree), who presented what he had been working on and gave us an update on the netdev development statistics (his main point being that we do need to review more patches).

For the first time, there was a talk from Bootlin at Netdev, as Maxime presented one of the topics he has been working on lately: improving support for multi-PHY and multi-port interfaces. Although it was one of the only talks focusing on the low-level aspects of the Ethernet stack, it triggered some discussions and interest from the community, which will help further improve the ongoing work.

The slides and videos of the event will be published at some point in the future; we will be sure to let our readers know when they become available.

We’ll conclude this short feedback by thanking once again the Netdev Board members, organizers, speakers and the audience for this great event.

We’ll come back 🙂

Linux 6.6 released, Bootlin contributions

Linux 6.6 was released yesterday, so this is the time for our usual blog post about our contributions to this release. Before that, to get an overall idea of what went into Linux 6.6, we recommend reading the articles from LWN.net covering the Linux 6.6 merge window: part 1 and part 2. The KernelNewbies page is perhaps a little bit less rich than it used to be, but still relevant.

On our side, this time around we contributed 68 changes to this release:

- Alexandre Belloni, as the RTC subsystem maintainer, submitted a few assorted patches touching various drivers in this subsystem

- Alexis Lothoré pushed some patches extending the rzn1-a5psw Ethernet switch driver with VLAN support and port_bridge_flags support. These patches were initially written by Clément Léger but had not been accepted until now.

- Hervé Codina got his audio-iio-aux driver merged, which allows the ASoC subsystem (for audio devices) to use IIO devices, such as a potentiometer. This came together with a number of fixes/improvements in the IIO subsystem. Hervé also fixed some reference counting issues in several I2C mux drivers.

- Miquèl Raynal pushed to the finish line a patch written several years ago by Bootlin engineer Kamel Bouhara, which hadn't been accepted until now. This patch adds a sysfs interface that allows retrieving the reset reason on Microchip ARM platforms.

- Luca Ceresoli fixed some issues in two DRM panel drivers and also fixed a regression in the NVIDIA Tegra camera interface driver

- Miquèl Raynal made a number of unrelated contributions:

- add support for the EDT ET028013DMA display panel to the existing sitronix-st7789v driver, which required quite a few preparatory changes

- fix a clock polarity issue in the DRM driver for the display controller used in Microchip ARM platforms

- improve many small aspects of the qcom NAND controller driver

- improve the handling of nvmem layouts in the nvmem subsystem

- fix an issue in the SJA1000 CAN controller driver that would cause the HW to stall after an overrun on some platforms

- Paul Kocialkowski contributed a few small assorted fixes to the media subsystem documentation

Here are the complete details of our contributions:

- Alexandre Belloni (5):

- Alexis Lothoré (2):

- Clément Léger (3):

- Herve Codina (17):

- ASoC: dt-bindings: Add audio-iio-aux

- ASoC: dt-bindings: simple-card: Add additional-devs subnode

- iio: inkern: Check error explicitly in iio_channel_read_max()

- iio: consumer.h: Fix raw values documentation notes

- iio: inkern: Remove the ‘unused’ variable usage in iio_channel_read_max()

- iio: inkern: Fix headers inclusion order

- minmax: Introduce {min,max}_array()

- iio: inkern: Use max_array() to get the maximum value from an array

- iio: inkern: Replace a FIXME comment by a TODO one

- iio: inkern: Add a helper to query an available minimum raw value

- ASoC: soc-dapm.h: Convert macros to return a compound literal

- ASoC: codecs: Add support for the generic IIO auxiliary devices

- ASoC: simple-card: Handle additional devices

- ASoC: fsl: fsl_qmc_audio: Fix snd_pcm_format_t values handling

- i2c: muxes: i2c-mux-pinctrl: Use of_get_i2c_adapter_by_node()

- i2c: muxes: i2c-demux-pinctrl: Use of_get_i2c_adapter_by_node()

- i2c: muxes: i2c-mux-gpmux: Use of_get_i2c_adapter_by_node()

- Kamel Bouhara (1):

- Kory Maincent (2):

- Luca Ceresoli (4):

- Miquel Raynal (31):

- dt-bindings: mtd: spi-nor: clarify the need for spi-nor compatibles

- dt-bindings: mtd: Fix nand-controller.yaml license

- of: module: Export of_device_uevent()

- gpu: host1x: Stop open-coding of_device_uevent()

- mtd: rawnand: qcom: Use the BIT() macro

- mtd: rawnand: qcom: Use u8 instead of uint8_t

- mtd: rawnand: qcom: Fix alignment with open parenthesis

- mtd: rawnand: qcom: Fix the spacing

- mtd: rawnand: qcom: Fix wrong indentation

- mtd: rawnand: qcom: Fix a typo

- mtd: rawnand: qcom: Early structure initialization

- mtd: rawnand: qcom: Fix address parsing within ->exec_op()

- dt-bindings: display: st7789v: Add the edt,et028013dma panel compatible

- dt-bindings: display: st7789v: bound the number of Rx data lines

- drm/panel: sitronix-st7789v: Use 9 bits per spi word by default

- drm/panel: sitronix-st7789v: Clarify a definition

- drm/panel: sitronix-st7789v: Add EDT ET028013DMA panel support

- drm/panel: sitronix-st7789v: Check display ID

- mtd: Clean refcounting with MTD_PARTITIONED_MASTER

- drm: atmel-hlcdc: Support inverting the pixel clock polarity

- mtd: spi-nor: Add support for sst26vf032b flash

- nvmem: core: Create all cells before adding the nvmem device

- nvmem: core: Return NULL when no nvmem layout is found

- nvmem: core: Do not open-code existing functions

- nvmem: core: Notify when a new layout is registered

- i3c: master: svc: Describe member ‘saved_regs’

- mtd: rawnand: marvell: Ensure program page operations are successful

- mtd: rawnand: arasan: Ensure program page operations are successful

- mtd: rawnand: pl353: Ensure program page operations are successful

- ASoC: soc-generic-dmaengine-pcm: Fix function name in comment

- can: sja1000: Always restart the Tx queue after an overrun

- Paul Kocialkowski (3):

Yocto Project Summit 2023.11: 2 Bootlin talks

The Yocto Project regularly organizes an online conference called the Yocto Project Summit. The next edition, Yocto Project Summit 2023.11, will take place on November 28-30, from 12:00 to 18:00 UTC, and at just $40, attending is really affordable.

Bootlin is not only a big user of the Yocto Project, but also a significant contributor to the project, so we’re happy to announce that our two talk proposals for the Yocto Project Summit 2023.11 have been accepted. Bootlin engineers will therefore deliver the following talks:

- Keep your layer simple — and here’s how, by Luca Ceresoli, on November 29, at 14:20 UTC.

- How to Contribute to OpenEmbedded, Yocto, and Many Other Open Source Projects, by Michael Opdenacker, on November 30 at 12:45 UTC.

If you are a user of the Yocto Project, or intend to become one, we highly recommend attending this event. And of course, if you need training on the Yocto Project, or engineering/support services, do not hesitate to contact us!

Internships at Bootlin in 2024

Bootlin is happy to announce its list of internship topics for 2024, which are open to students in engineering schools or similar, from France or the European Union. Our internship booklet is in French as most of our interns come from France.

The proposed topics are as follows:

- Drivers and hardware support in Linux or U-Boot

- Improvement of the Elixir code navigation service

- Security tracking of Linux BSP

- Implementation of a reference secure embedded Linux OS

- Addition of USB composite gadget support in U-Boot

- Improvement of IEEE 802.15.4 support in Linux

- Open topic related to embedded Linux

Bootlin is a company with a strong open-source focus: the results of the internships will be contributed to the corresponding open-source projects and will lead to the publication of blog articles or public presentations. In addition, interns will have the opportunity to work with a team of engineers with strong expertise in embedded Linux and open-source contributions.

The internships are offered for 2024, with flexible start dates, but for a minimum duration of 4 months. These internships will take place either in our offices in the Toulouse area (Colomiers) or in the Lyon area (Oullins), in order to be integrated into our team of engineers. These internships are open to all students who are citizens of the European Union.

Although several internship positions are offered, Bootlin will host a maximum of 2 interns simultaneously, in order to provide interns with high-quality supervision and guidance from Bootlin’s engineers.

For any questions or applications regarding these internship offers, please contact jobs@bootlin.com.

Bootlin at Netdev 0x17, THE Technical Conference on Linux Networking

Bootlin will be at the Netdev 0x17 conference, subtitled THE Technical Conference on Linux Networking. It is indeed one of the major events for developers working on the networking side of the Linux kernel to gather and discuss current and future topics. This year, the conference will take place from Oct 30 to Nov 3 in Vancouver, Canada.


Bootlin is involved in a number of Linux kernel networking developments: development and/or improvement of Linux kernel drivers for Ethernet MACs, Ethernet PHYs and WiFi chips, support for SFP, Ethernet switches, PTP offloading and MACsec offloading, improvements to the 802.15.4 stack, and more. As such, it is very relevant for us to meet the Linux kernel networking community, present our work, and understand where things are heading in the networking stack.

Our engineers Maxime Chevallier and Alexis Lothoré will both attend the conference. In addition, Maxime will be presenting a talk titled Improving multi-phy and multi-port interfaces:

This talk will describe current use cases where one MAC is connected to multiple PHYs (chained, or in parallel) and multiple front-facing ports, either through multiple PHYs or through a single multi-port PHY. Support for some of these scenarios already exists, but it is limited by the fact that the PHY device is hidden behind a net_device from userspace's point of view. We therefore can't configure an individual PHY when multiple PHYs are present on a link (through SFP transceivers for example), and selecting which front-facing port to use is also limited. This talk will describe ongoing work to support these complex topologies, the challenges faced and the expected improvements.

We look forward to attending this event in a few weeks' time!

Systemd, read-only rootfs and overlay file system over /etc

Systemd is a popular init system, used to bootstrap user space and manage user processes. It now replaces several traditional Linux utilities with its own components for log management, networking, time management, etc. There is even a bootloader component now. Systemd is obviously ubiquitous nowadays in desktop/server Linux distributions, and is also commonly used on embedded devices to benefit from features such as parallel startup of services, monitoring of services, and more.

In a recent project that uses Buildroot as its build system, we have used systemd with the storage consisting of a read-only root filesystem (SquashFS) and an overlay file system (OverlayFS) mounted on /etc. While doing this, we faced two issues with the use of OverlayFS on /etc:

- /etc/machine-id file management. This file is created by systemd during the first boot, and if the root filesystem is read-only, systemd will bind mount it from /run and wait to have read-write access to create it (see more details). In that case, the machine-id file is re-generated at each boot (because /run is a tmpfs), which means that the machine identification changes at each boot, which is not necessarily desirable. On the other hand, we don't want the machine-id file to be part of the SquashFS filesystem, because the SquashFS filesystem is identical on all devices, while the /etc/machine-id file is unique per device. So ideally, we would like this machine-id file to be stored in our OverlayFS and generated during the first boot. The issue is that systemd reads the machine-id file very early, before we get the chance to mount the OverlayFS.

- We wanted to be able to add or modify systemd services using the OverlayFS. Systemd parses the service files at early init and executes them according to their order and dependencies. The service mounting the filesystems from /etc/fstab, like any other service, is started after this parsing, which is too late. We could think of running daemon-reload from a custom service once mounting is complete, but this is not really a stable solution, as Lennart Poettering commented in a short e-mail thread about this issue.

The solution suggested by Lennart, and elsewhere on the wider Internet, is to mount the OverlayFS from an initramfs, which makes it possible to set it up before systemd even starts. However, we use Buildroot, where an initramfs adds complexity by requiring a separate configuration to manage multiple images; this was overkill in our case, just for setting up the overlay. The solution we eventually chose was to create an init_overlay.sh script, which is started as init before systemd by adding init=/sbin/init_overlay.sh to the kernel command line:

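```shell
#!/bin/sh
# Run by the kernel before systemd (init=/sbin/init_overlay.sh)
mount -t proc -o nosuid,nodev,noexec none /proc
mount -t sysfs -o nosuid,nodev,noexec none /sys
# Read-write data partition holding the overlay upper and work directories
mount /dev/mmcblk0p2 /mnt/data
# Read-only /etc from the SquashFS is the lower layer, changes go to /mnt/data/etc
mount -t overlay overlay -o lowerdir=/etc,upperdir=/mnt/data/etc,workdir=/mnt/data/.etc-work /etc
# Hand over to systemd, which keeps running as PID 1
exec /sbin/init
```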
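Once the system is up, it can be useful to confirm that /etc really is the overlay and that the machine-id ended up in the persistent upper directory. The helpers below are a minimal sketch and not part of the original setup. They assume the /proc/mounts line format and the /mnt/data/etc upper directory used by init_overlay.sh, and the function names are hypothetical. Both functions take the file or directory to inspect as a parameter, so they can be tried against test data.

```shell
#!/bin/sh
# Hypothetical helpers to check the /etc overlay setup (not from the original post).

# etc_is_overlay MOUNTS_FILE
# Succeeds if MOUNTS_FILE (normally /proc/mounts) lists an overlay file
# system mounted on /etc. Line format: device mountpoint fstype options ...
etc_is_overlay() {
    awk '$2 == "/etc" && $3 == "overlay" { found = 1 } END { exit !found }' "$1"
}

# machine_id_persisted UPPER_DIR
# Succeeds if UPPER_DIR (e.g. /mnt/data/etc) contains a machine-id made of
# 32 lowercase hexadecimal characters, the format described in machine-id(5).
machine_id_persisted() {
    [ -f "$1/machine-id" ] && grep -Eqx '[0-9a-f]{32}' "$1/machine-id"
}
```

On the target, `etc_is_overlay /proc/mounts` and `machine_id_persisted /mnt/data/etc` should both succeed once the device has completed its first boot; if the second one fails, the machine-id is likely still being regenerated at each boot.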

Hopefully, this will be useful to others. Of course, we’re also curious to hear if others faced the same issue, and discover how they solved this. Let us know in the comments.

Snagboot: Designing a USB recovery process for AM335x SoCs

A few months ago, Bootlin released Snagboot, an open-source and generic replacement for the vendor-specific, sometimes proprietary, tools used to recover and reflash embedded platforms. This has led us to design recovery processes over USB for several different SoC families.

Our goal for each recovery process was the following: be able to upload U-Boot in external RAM and run it without modifying any non-volatile memories. Implementing this for many different platforms was challenging, as each vendor used different protocols, bootloader binaries, and methods to boot from recovery mode. Moreover, it was critical that the recovery tool be as user-friendly as possible, not requiring any complex configuration or vendor-specific workflows. This blog post describes the strangest recovery process we had to support so far: the one provided over USB by the Texas Instruments AM335x SoC.

Initializing AM335x platforms

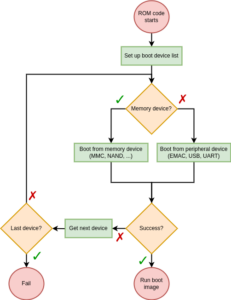

When booted, each SoC has a specific sequence of actions it performs to load and run a target operating system or bare-metal program. This sequence typically starts with a ROM code, stored in a non-volatile internal memory. The main job of a ROM code is to search for a first-stage bootloader in various external memories and load it to internal RAM. In the case of AM335x platforms, this initialization sequence is described in the TI reference manual.

As we can see, there is nothing too outlandish here. The ROM code checks each device in its boot sequence and attempts to boot from it. What is particularly interesting to us here is the Boot from peripheral device part. Indeed, our ultimate goal is to send U-Boot to the SoC over a USB connection. So we will now dig a little further into this peripheral boot feature. The reference manual states that the AM335x ROM code is capable of booting from three types of peripheral interfaces: EMAC (Ethernet), USB and UART. Considering what we said earlier, what really interests us here is the USB boot feature. The USB boot procedure is described in more detail in the reference manual. And this is where things get a little strange.

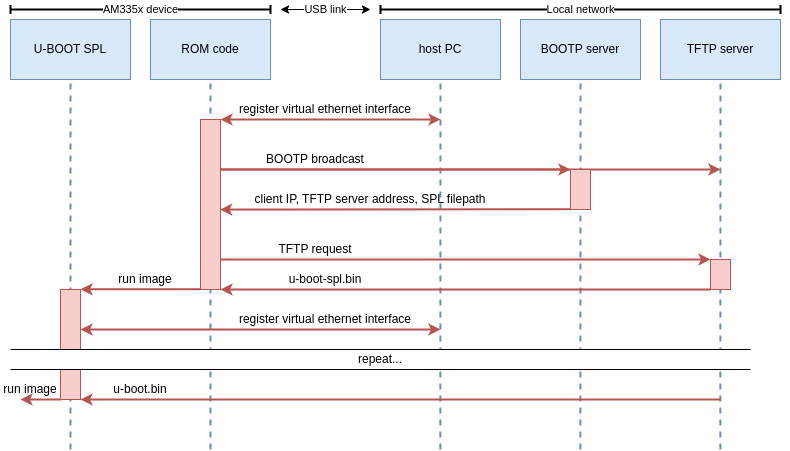

Most ROM codes we've encountered use fairly simple vendor protocols to communicate over USB. You'll typically find some memory read/write operations, some run operations, and maybe a few vendor-specific commands. The AM335x ROM code, however, uses network protocols to boot over USB! Specifically, the ROM code exposes an RNDIS class device, which will be registered as an Ethernet interface by the host-side rndis_host driver. The ROM code will then broadcast BOOTP requests. A BOOTP server on the network should respond to this and supply the SoC with an IP address and the address of a TFTP server. Finally, the ROM code will download the first-stage firmware from this TFTP server. To summarize, here is the expected USB boot procedure for AM335x SoCs:

This poses a number of issues. Remember, our goal is to boot the SoC using snagboot, a user-friendly and easily configurable CLI tool. This means we can't expect the user to perform any complicated network configuration to be able to use the tool! So these are the main challenges associated with recovering AM335x SoCs:

- We need a BOOTP and TFTP server to respond to the ROM code. These servers need IP addresses, which means our tool has to obtain IPs every time it runs.

- BOOTP and TFTP servers use ports 67 and 69 which are privileged. However, we don’t want users to have to run snagboot as root.

- The ROM code requires an IP address, which means that snagboot has to supply a valid IP address to it every time it runs the recovery.

- If another BOOTP server is present on the user’s network during recovery, it could try to answer the ROM code, interfering with snagboot’s operation.
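The privileged-port constraint can be made concrete. On Linux, a process without root privileges (more precisely, without CAP_NET_BIND_SERVICE) may only bind to ports at or above the value of the net.ipv4.ip_unprivileged_port_start sysctl, which is 1024 by default. The helper below is a hypothetical sketch of that check, not part of snagboot. It reads the sysctl when it is available and otherwise assumes 1024, and it accepts an explicit threshold as an optional second argument.

```shell
#!/bin/sh
# Hypothetical helper, not part of snagboot.
# is_privileged_port PORT [START]
# Succeeds if binding PORT requires root (or CAP_NET_BIND_SERVICE).
# START is the first unprivileged port; when omitted, it is read from the
# net.ipv4.ip_unprivileged_port_start sysctl, falling back to 1024.
is_privileged_port() {
    start=${2-}
    if [ -z "$start" ]; then
        start=1024
        sysctl_file=/proc/sys/net/ipv4/ip_unprivileged_port_start
        if [ -r "$sysctl_file" ]; then
            start=$(cat "$sysctl_file")
        fi
    fi
    [ "$1" -lt "$start" ]
}

# The BOOTP/TFTP ports, then the unprivileged ports used in their place
for port in 67 69 9067 9069; do
    if is_privileged_port "$port"; then
        echo "port $port: privileged"
    else
        echo "port $port: unprivileged"
    fi
done
```

With the default threshold of 1024, ports 67 and 69 are reported as privileged and ports 9067 and 9069 as unprivileged. This is why redirecting traffic to the higher ports lets the servers run as a regular user. Some container runtimes lower the sysctl, so results on such hosts may differ.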

Designing a user-friendly recovery process

To circumvent these challenges, we made use of a number of nice Linux features. Firstly, we can see that the common theme in all these issues is interference with the user’s network. We have to work with local routers to get IP addresses, and we have to ensure that other BOOTP servers will not race us to respond to the board. To address this need, we’ve made use of network namespaces, which are a way of partitioning network resources on the system. When a process runs in a separate network namespace, it will not share network interfaces, routing rules, or firewall rules with the rest of the system.

This is very interesting to us, as it means that we can effectively create a sandbox environment where we can interact with the AM335x ROM code without touching the user’s local network! We can set whatever strange routing and firewall rules we want, and they will be automatically destroyed when we delete the namespace! The general sequence for our recovery process is:

- Move the ROM code's virtual Ethernet interface to a new "snagbootnet" namespace

- Set up firewall rules to redirect ports 67 and 69 to unprivileged ports 9067 and 9069, which spares us from running as root.

- Set up routing rules to assign whatever IPs we want to the ROM interface and the servers generated by snagboot.

- Run snagrecover, which will serve a U-Boot SPL image to the ROM code

- Repeat the same process to serve a U-Boot image to SPL (SPL will use essentially the same boot process as the ROM code)

```shell
# These iptables rules allow snagboot to use unprivileged ports 9067 and 9069
# as proxies for privileged ports 67 and 69
ip netns exec $NETNS_NAME iptables -t nat -A PREROUTING \
    -p udp --dport 67 -j DNAT --to-destination :9067
ip netns exec $NETNS_NAME iptables -t nat -A PREROUTING \
    -p udp --dport 69 -j DNAT --to-destination :9069
ip netns exec $NETNS_NAME iptables -t nat -A POSTROUTING \
    -p udp --sport 9067 -j MASQUERADE --to-ports 67
ip netns exec $NETNS_NAME iptables -t nat -A POSTROUTING \
    -p udp --sport 9069 -j MASQUERADE --to-ports 69
```
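The other namespace steps from the list above are creating the namespace, moving the ROM code's interface into it and assigning an address on the host side. They could look like the sketch below. It is a hypothetical dry-run generator, not snagboot's actual setup script. It only prints standard ip(8) commands so they can be reviewed before being run as root. The function name, the usb0 interface name and the 192.168.0.1/24 address are placeholders. Only the "snagbootnet" namespace name comes from the text.

```shell
#!/bin/sh
# Hypothetical dry-run generator for the namespace setup (not snagboot's code).
# print_netns_setup IFACE NETNS HOST_CIDR
# Prints the commands instead of running them; pipe the output to "sudo sh"
# to actually apply them.
print_netns_setup() {
    iface=$1
    netns=$2
    host_cidr=$3
    # Create an isolated network namespace
    echo "ip netns add $netns"
    # Move the RNDIS interface exposed by the ROM code into it
    echo "ip link set dev $iface netns $netns"
    # Inside the namespace, bring up loopback, give the host side an
    # address for the BOOTP/TFTP servers, and bring the interface up
    echo "ip netns exec $netns ip link set dev lo up"
    echo "ip netns exec $netns ip addr add $host_cidr dev $iface"
    echo "ip netns exec $netns ip link set dev $iface up"
}

print_netns_setup usb0 snagbootnet 192.168.0.1/24
```

Running `ip netns delete snagbootnet` once recovery is over removes the namespace along with the firewall and routing rules created inside it. This is the cleanup property the recovery process relies on.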

The network namespace and network configuration can be set up by a wrapper script that the user runs before running snagboot normally. However, there is another challenging issue with this method. When U-Boot SPL runs, it will expose a new RNDIS interface, which will be registered by the host system and brought up in the default network namespace. This means that we will not be able to access SPL's virtual Ethernet interface from inside our custom network namespace! Thus, we must use one final trick to automatically move SPL's interface into our namespace when it is brought up. The namespace setup script runs a polling subprocess in the background. This subprocess regularly checks /sys/class/net for new interfaces matching certain USB addresses, and automatically moves them to our namespace once detected.

```shell
poll_interface () {
	# check for network interfaces with device nodes matching our ROM code
	# and SPL RNDIS gadget addresses
	ROMNETFILE=$(grep -l "PRODUCT=$ROMUSB" $(grep -l "DEVTYPE=usb_interface" /sys/class/net/*/device/uevent))
	SPLNETFILE=$(grep -l "PRODUCT=$SPLUSB" $(grep -l "DEVTYPE=usb_interface" /sys/class/net/*/device/uevent))

	if [ -e "$ROMNETFILE" ]; then
		config_interface "$(echo $ROMNETFILE | cut -d '/' -f 5)"
	fi

	if [ -e "$SPLNETFILE" ]; then
		config_interface "$(echo $SPLNETFILE | cut -d '/' -f 5)"
	fi
}
```
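The lookup performed by poll_interface can be exercised without hardware if the sysfs root is passed as a parameter. The sketch below is a hypothetical reimplementation, not snagboot's code. find_usb_netifs prints the names of network interfaces whose device uevent reports DEVTYPE=usb_interface and a given PRODUCT value. watch_usb_netifs shows the kind of background polling loop described above, with the action (config_interface in the original) passed in by the caller. The PRODUCT value used below is made up.

```shell
#!/bin/sh
# Hypothetical sketch of the sysfs lookup done by poll_interface.

# find_usb_netifs SYSFS_NET PRODUCT
# Prints the name of every interface under SYSFS_NET (normally
# /sys/class/net) whose device/uevent describes a USB interface with the
# given PRODUCT value.
find_usb_netifs() {
    for uevent in "$1"/*/device/uevent; do
        # When nothing matches, the glob is left unexpanded: skip it
        [ -f "$uevent" ] || continue
        if grep -qxF "DEVTYPE=usb_interface" "$uevent" &&
            grep -qxF "PRODUCT=$2" "$uevent"; then
            # <SYSFS_NET>/<iface>/device/uevent -> <iface>
            basename "$(dirname "$(dirname "$uevent")")"
        fi
    done
}

# watch_usb_netifs PRODUCT ACTION
# Polls /sys/class/net every second and runs ACTION on each matching
# interface. This assumes ACTION moves the interface into another
# namespace, so that it disappears from /sys/class/net and is not handled twice.
watch_usb_netifs() {
    while true; do
        for iface in $(find_usb_netifs /sys/class/net "$1"); do
            "$2" "$iface"
        done
        sleep 1
    done
}
```

For example, `find_usb_netifs /sys/class/net 1234/5678/0` prints the matching interface names, one per line. Letting the glob and `[ -f ]` handle the "no interface yet" case avoids calling grep with an empty file list.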

You can check out the full setup script by running snagrecover --am335x-setup if you are interested.

With this, we have a complete recovery process for AM335x! From the user's point of view, the only big difference from other SoC recoveries is an additional helper script that needs to be run before snagrecover. Designing the AM335x support for Snagboot was a very interesting technical problem, with a solution that illustrates the flexibility offered by Linux systems.

Welcome to Romain Gantois and Louis Chauvet

We are pleased to welcome two additional engineers to our team based in Toulouse, France: Romain Gantois and Louis Chauvet.


Romain Gantois graduated from ISEP and completed his final internship at Bootlin, during which he developed and published Snagboot, the generic and open-source board recovery and reflashing tool, and worked on an upstream Linux kernel driver for a Qualcomm Ethernet switch (patches will be submitted soon!). Following this internship, Romain is joining our team as a full-time embedded Linux and Linux kernel engineer.

Louis Chauvet graduated from INSA Toulouse. He completed his final internship abroad, during which he worked on Rust development, in particular Linux kernel drivers written in Rust. Louis is also joining us as a full-time embedded Linux and Linux kernel engineer.

Both Romain and Louis are experienced Linux users and developers, with a solid education in low-level and embedded systems development. They will help us address more embedded Linux projects from our customers on a wide variety of topics, and are already benefiting from our training courses and the interaction with our senior engineers to quickly gain even more knowledge and experience.

Once again, welcome Romain and Louis!