Raspberry Pi Display Support and IGT

We have been working with Raspberry Pi for quite some time, especially on the display side. Our work is part of a larger ongoing effort to move away from the VC4 firmware for display operations and to use native Linux drivers instead, which interact with the hardware directly. This transition is a long process: the native drivers must become efficient and reliable enough to cover most use cases of Raspberry Pi users.

Continuous Integration (CI) plays an important role in that process, since it allows detecting regressions early in the development cycle. This is why we have been tasked with improving testing in IGT GPU Tools, the test suite for the DRM subsystem of the kernel (which handles display). We already presented the work we conducted for testing various pixel formats with IGT on the Raspberry Pi’s VC4 last year. Since then, we have continued the work on IGT and brought it even further.

Improving YUV and Adding Tiled Pixel Formats Support

We continued the work on pixel formats by generalizing support for YUV buffers and reworking the format conversion helpers to support most of the common YUV formats instead of a reduced number of them. This led to numerous commits that were merged in IGT:

- igt: fb: Add subsampling parameters to the formats

- igt: fb: generic YUV convertion function

- igt: fb: Move i915 YUV buffer clearing code to a function

- igt: fb: Refactor dumb buffer allocation path

- igt: fb: Add size checks and recalculation before dumb allocation

- igt: fb: Account for all planes bpp

- igt: fb: Clear YUV dumb buffers

- igt: fb: Rework YUV i915 allocation path
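
To illustrate why per-format subsampling parameters are useful, here is a minimal sketch that derives the size of each plane of a YUV buffer from horizontal and vertical subsampling factors. The `PlaneLayout` type, the `FORMATS` table and the `plane_sizes` function are hypothetical names chosen for this example and are not IGT's API. The sketch also ignores stride alignment, which a real allocation path has to account for.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlaneLayout:
    hsub: int   # horizontal subsampling factor (1 = full resolution)
    vsub: int   # vertical subsampling factor (1 = full resolution)
    bytes: int  # bytes stored per sample position in this plane


# Hypothetical format table, for illustration only.
FORMATS = {
    # Full-resolution Y plane, then interleaved U/V at half resolution.
    "NV12": [PlaneLayout(1, 1, 1), PlaneLayout(2, 2, 2)],
    # Three separate planes, chroma halved in both directions.
    "YUV420": [PlaneLayout(1, 1, 1), PlaneLayout(2, 2, 1), PlaneLayout(2, 2, 1)],
    # Three separate planes, chroma halved horizontally only.
    "YUV422": [PlaneLayout(1, 1, 1), PlaneLayout(2, 1, 1), PlaneLayout(2, 1, 1)],
}


def plane_sizes(fmt, width, height):
    """Return the byte size of each plane, without any stride padding."""
    sizes = []
    for plane in FORMATS[fmt]:
        # Round up so that odd dimensions still get a chroma sample.
        plane_width = -(-width // plane.hsub)
        plane_height = -(-height // plane.vsub)
        sizes.append(plane_width * plane_height * plane.bytes)
    return sizes


if __name__ == "__main__":
    for name in FORMATS:
        print(name, plane_sizes(name, 640, 480))
```

With a table like this, a single generic code path can handle a new format by adding one entry instead of a dedicated function.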

In the meantime, we have also added support for testing specific tiling modes for display buffers. A tiling mode indicates that the pixel data is laid out differently from the usual line-after-line linear raster order. Tiled layouts give the hardware more efficient access to the data and yield better performance. On the Raspberry Pi, they are used by the GPU (T tiling) and the VPU (SAND tiling). This required introducing a few changes to IGT as well as adding helpers for converting to the tiling modes, which was done in the following commits:

- lib/igt_fb: Add support for allocating T-tiled VC4 buffers

- lib/igt_fb: Add support for VC4 SAND tiling modes

- lib/igt_fb: Allow interpreting the tile height as a stride equivalent

- lib/igt_vc4: Add helpers for converting linear to T-tiled RGB buffers

- lib/igt_vc4: Add helpers for converting linear to SAND-tiled buffers

- lib/igt_fb: Pass the modifier to igt_fb_convert helpers

- lib/igt_fb: Support converting to VC4 modifiers in igt_fb_convert
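
The general idea behind converting a linear buffer to a tiled one can be sketched as follows. This example uses a deliberately simple layout, with rectangular tiles stored one after another and pixels in raster order inside each tile. It is an assumption made for illustration: the actual VC4 T and SAND layouts are more involved and are not reproduced here. The function names are hypothetical as well.

```python
def tiled_offset(x, y, width, cpp, tile_w, tile_h):
    """Byte offset of pixel (x, y) in a simple tiled layout."""
    tiles_per_row = width // tile_w
    # Index of the tile containing the pixel, in raster order of tiles.
    tile_index = (y // tile_h) * tiles_per_row + (x // tile_w)
    # Index of the pixel inside its tile, in raster order of pixels.
    in_tile = (y % tile_h) * tile_w + (x % tile_w)
    return (tile_index * tile_w * tile_h + in_tile) * cpp


def linear_to_tiled(src, width, height, cpp, tile_w, tile_h):
    """Copy a linear buffer (cpp bytes per pixel) into the tiled layout."""
    if width % tile_w or height % tile_h:
        raise ValueError("dimensions must be multiples of the tile size")
    if len(src) != width * height * cpp:
        raise ValueError("source buffer size does not match dimensions")
    dst = bytearray(len(src))
    for y in range(height):
        for x in range(width):
            s = (y * width + x) * cpp
            d = tiled_offset(x, y, width, cpp, tile_w, tile_h)
            dst[d:d + cpp] = src[s:s + cpp]
    return bytes(dst)


if __name__ == "__main__":
    # A 4x2 image with one byte per pixel, split into two 2x2 tiles.
    linear = bytes(range(8))
    print(list(linear_to_tiled(linear, 4, 2, 1, 2, 2)))
```

Pixels that are vertical neighbours in the image end up close together in memory, which is what makes this kind of layout friendlier to hardware that reads two-dimensional blocks.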

DRM Planes Support

The display engine hardware used on the Raspberry Pi allows displaying multiple framebuffers on-screen, in addition to the primary one (where the user interface lives). This feature is especially useful to display video streams directly, without having to perform the composition step with the CPU or GPU. The display engine offers features such as colorspace conversion (for converting YUV to RGB) and scaling, which are usually quite intensive tasks. In the Linux kernel’s DRM subsystem, this ability of the display engine hardware is exposed through DRM planes.

We worked on adding support for testing DRM planes with the Chamelium board, with a fuzzing test that selects randomized attributes for the planes. Our work led to the introduction of a new test in IGT:

Dealing with Imperfect Outputs

With the Chamelium, there are two major ways of finding out whether the captured display is correct or not:

- Comparing the captured frame’s CRC with a CRC calculated from the reference frame;

- Comparing the pixels in the captured and reference frames.

While the first method is the fastest one (because the captured frame’s CRC is calculated by the Chamelium board directly), it can only work if the captured frame and the reference are guaranteed to be identical, pixel for pixel. Since HDMI is a digital interface, this is generally the case. But as soon as scaling or colorspace conversion is involved, the algorithms used by the hardware do not produce exactly the same pixels as performing the operation on the reference with the CPU.

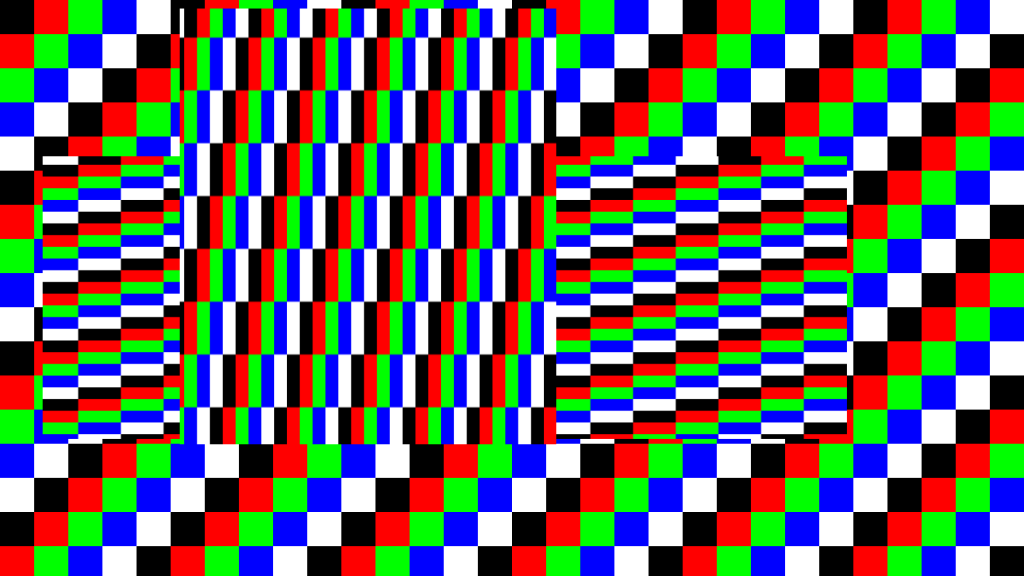

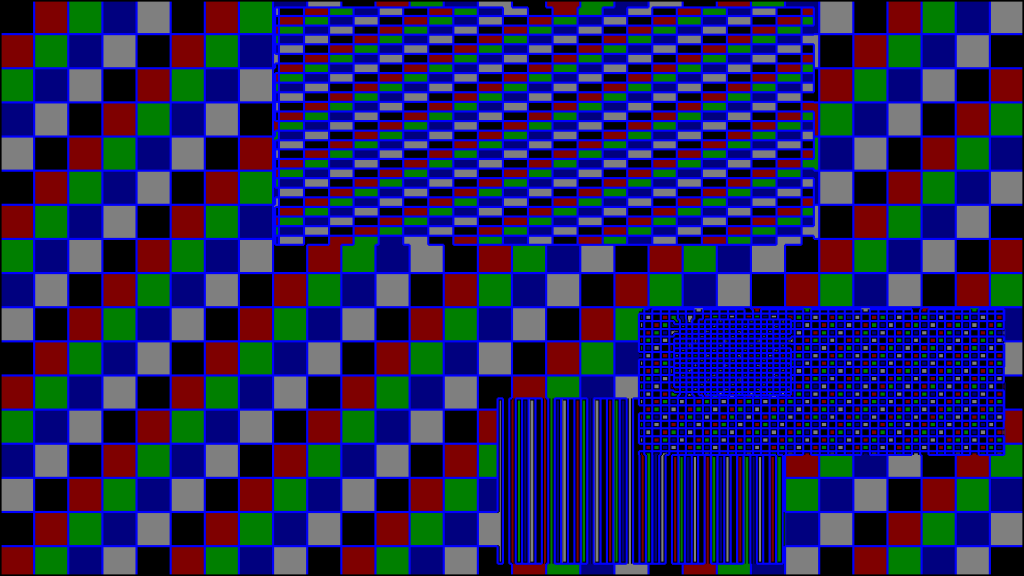

Because of this issue, we had to come up with a specific checking method that excludes areas where there are such differences. Since our display pattern resembles a colorful checkerboard with solid-filled areas, most of the differences are only noticeable at the edges of each color block. As a result, we introduced a checking method that excludes the checkerboard edges from the comparison.
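
A comparison that skips the block edges can be sketched along these lines. This is a simplified illustration rather than the code contributed to IGT: it assumes square blocks aligned on a regular grid, and the `block`, `margin` and `tolerance` parameters are hypothetical. The `tolerance` parameter in particular is an addition of this sketch, to allow for small per-channel differences inside the blocks.

```python
def near_edge(coord, block, margin):
    """True if a coordinate lies within `margin` pixels of a block boundary."""
    pos = coord % block
    return pos < margin or pos >= block - margin


def frames_match(captured, reference, block, margin, tolerance=0):
    """Compare two frames, ignoring pixels close to checkerboard edges.

    Frames are lists of rows, each row being a list of (r, g, b) tuples.
    """
    if len(captured) != len(reference):
        return False
    for y, (cap_row, ref_row) in enumerate(zip(captured, reference)):
        if len(cap_row) != len(ref_row):
            return False
        for x, (cap, ref) in enumerate(zip(cap_row, ref_row)):
            if near_edge(x, block, margin) or near_edge(y, block, margin):
                continue  # excluded area: differences are expected here
            if any(abs(a - b) > tolerance for a, b in zip(cap, ref)):
                return False
    return True


if __name__ == "__main__":
    red = (255, 0, 0)
    reference = [[red] * 8 for _ in range(8)]
    captured = [row[:] for row in reference]
    captured[0][0] = (200, 30, 30)  # blurred pixel on a block edge
    print(frames_match(captured, reference, block=4, margin=1))
```

The width of the excluded margin is the main value to adjust: too small and scaling artifacts cause false failures, too large and real defects near the edges go unnoticed.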

After some tweaking, this method turned out to be reliable, with very few incorrect results. It was contributed to IGT with commit:

Underrun Detection

We also worked on implementing display pipeline underrun detection in the kernel’s VC4 DRM driver. Underruns occur when the display pipeline is asked to process too much pixel data (e.g. because too many DRM planes are enabled) and the hardware can’t keep up. A bandwidth filter was also added to reject configurations that would likely lead to an underrun. This led to a few commits that were already merged upstream:

- drm/vc4: Report HVS underrun errors

- drm/vc4: Add a load tracker to prevent HVS underflow errors

- drm/vc4: Add a debugfs entry to disable/enable the load tracker
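
The principle of such a bandwidth filter can be sketched with a very rough model: estimate how many bytes per second the enabled planes require and refuse the configuration if that exceeds a budget. This is only an illustration of the idea, not the kernel driver's actual calculation. The `Plane` type, the function names and the budget value are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plane:
    width: int   # source width in pixels
    height: int  # source height in pixels
    cpp: int     # bytes per pixel


def required_bandwidth(planes, refresh_hz):
    """Rough estimate of the bytes fetched per second for a set of planes."""
    bytes_per_frame = sum(p.width * p.height * p.cpp for p in planes)
    return bytes_per_frame * refresh_hz


def config_allowed(planes, refresh_hz, budget_bytes_per_s):
    """Accept a configuration only if its estimated load fits the budget."""
    return required_bandwidth(planes, refresh_hz) <= budget_bytes_per_s


if __name__ == "__main__":
    full_hd = Plane(1920, 1080, 4)
    budget = 600_000_000  # hypothetical budget, in bytes per second
    print(config_allowed([full_hd], 60, budget))
    print(config_allowed([full_hd, full_hd], 60, budget))
```

Rejecting a configuration up front lets userspace fall back to a lighter setup instead of discovering the problem as visible glitches on screen.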

We prepared a test in IGT to ensure that underruns are correctly reported, that the bandwidth protection does its job, and that both are consistent. This test was submitted for review with patch:
