Just one day before the end of May, Buildroot 2010.05 has been released by Peter Korsgaard, as predicted by the fixed release schedule used by the project. It can be downloaded at http://buildroot.org/downloads/buildroot-2010.05.tar.bz2. For the record, Buildroot is a simple and efficient tool to build embedded Linux systems: cross-compiling toolchain, root filesystem, kernel image and bootloader.

Major changes

The major user-visible changes are:

- Reorganization of the menuconfig layout for packages. All packages are now organized in categories, which makes them easier to find.

- Our X.org package set has been upgraded to X.org 7.5.

- Several new packages have been added: cdrkit, cramfs, genext2fs, genromfs, libatomic_ops, librsync, libusb-compat, lmbench, netperf, squashfs, squashfs3, squid. Many of them were added as a result of the cleanup of the filesystem image generation code.

- On the internal toolchain side (i.e. toolchains generated by Buildroot), we have added support for uClibc 0.9.31, GCC 4.4.4, GDB 7.x and binutils 2.20.1.

- On the external toolchain side (i.e. re-using existing toolchains), we have improved support for multilib toolchains (such as CodeSourcery toolchains).

In addition to these changes, 41 bugs from our bug tracker have been fixed, and dozens of packages have been upgraded or fixed.

Bootlin contributions

Bootlin has again made significant contributions to this release:

git shortlog -s -n 2010.02..

224 Paulius Zaleckas

182 Thomas Petazzoni, from Bootlin

148 Peter Korsgaard

28 Gustavo Zacarias

26 Will Wagner

14 Lionel Landwerlin

6 Yann E. MORIN

[...]

The things we have contributed include:

- A big cleanup of the Buildroot code that generates the root filesystem images. It has been moved from various directories in target/ to a single, central location: fs/. The code that handled the compilation of host utilities used to generate the filesystem images (genext2fs, cdrkit, mtd-utils, cramfs, squashfs, etc.) has been moved to normal packages, and an infrastructure has been added to factor out the code shared by the various filesystem generation makefiles.

- Better support for multilib external toolchains.

- A new script that generates nice dependency graphs (see below).

- A clarification of the gettext integration, to make it work properly with glibc toolchains.

- Fixes for bugs #75 and #1789.

- Dozens of build fixes found by testing random configurations.

- Various code cleanups, which led to the removal of several configuration options and make Buildroot a little easier to use.

Dependency graph generation

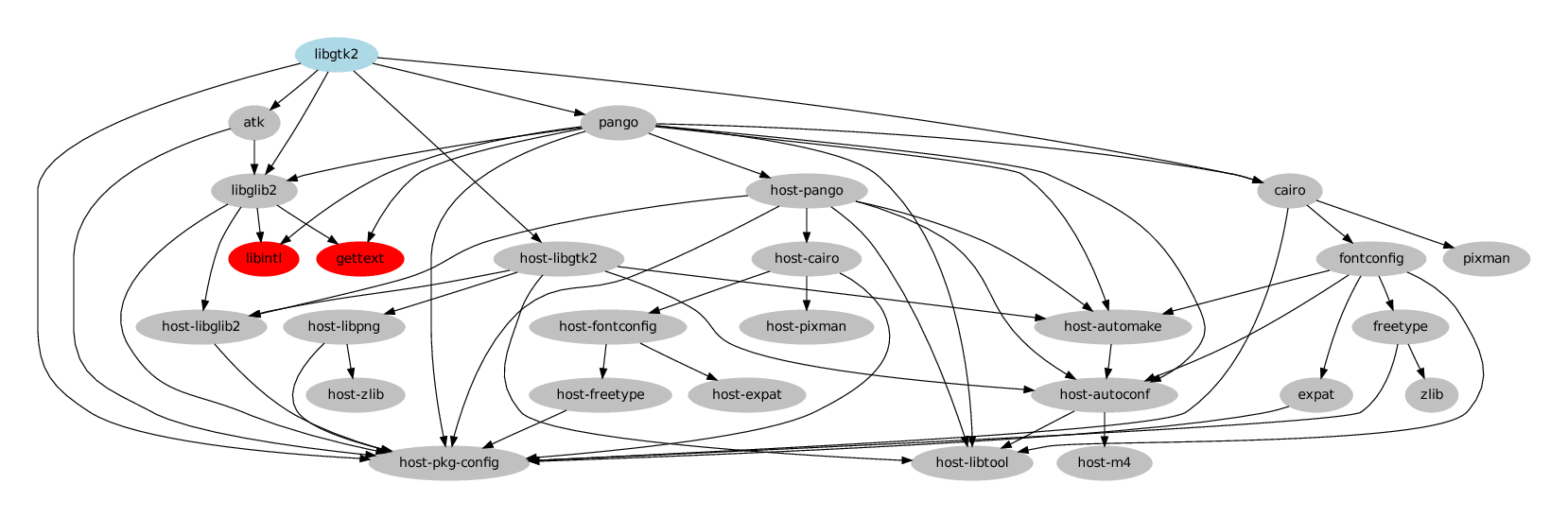

Thanks to the new package infrastructure that we have included in Buildroot a few releases ago, it is now easier to retrieve the list of dependencies of each package in a generic way. Using this, I recently implemented a dependency graph generation tool. It allows to generate nice graphs of the dependencies for a given package, like libgtk2 in the following example (click for the full sized version):

Note that packages in red do not use the generic or autotools infrastructure, so we could not determine their dependencies.
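To make the idea concrete, here is a minimal sketch of turning a "package to dependencies" map into a Graphviz DOT graph, with packages whose dependencies are unknown drawn in red. This is not the Buildroot graph-depends script: the input format, the `to_dot` function and the package names (`app`, `libfoo`, `legacy-lib`, ...) are hypothetical, chosen only to illustrate the technique.

```python
from typing import Dict, List, Optional


def to_dot(deps: Dict[str, Optional[List[str]]], root: Optional[str] = None) -> str:
    """Render a package dependency map as a Graphviz DOT digraph.

    deps maps a package name to its list of dependencies, or to None when
    the dependencies are unknown (as for packages that do not use the
    generic or autotools infrastructure); such packages are colored red.
    Packages that appear only as dependencies are also treated as unknown.
    If root is given, only packages reachable from root are emitted.
    """
    # Depth-first walk from the requested starting points.
    start = [root] if root is not None else sorted(deps)
    seen = set()
    stack = list(start)
    while stack:
        pkg = stack.pop()
        if pkg in seen:
            continue
        seen.add(pkg)
        stack.extend(deps.get(pkg) or [])

    lines = ["digraph G {"]
    for pkg in sorted(seen):
        # Unknown dependency list: highlight the node in red.
        attrs = " [color=red]" if deps.get(pkg) is None else ""
        lines.append(f'  "{pkg}"{attrs};')
    for pkg in sorted(seen):
        for dep in deps.get(pkg) or []:
            lines.append(f'  "{pkg}" -> "{dep}";')
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical package names and dependencies, for illustration only.
    example = {
        "app": ["libfoo", "legacy-lib"],
        "libfoo": ["libbar"],
        "libbar": [],
        "legacy-lib": None,  # dependencies unknown
        "unused": [],
    }
    print(to_dot(example, root="app"))
```

Passing a `root` gives the single-package view, while omitting it walks every known package, which mirrors the two uses of the tool. The resulting text can be fed to `dot -Tpdf` like any other .dot file.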

We can also generate the dependency graph for a complete Buildroot configuration, with all packages:

Using this tool is fairly easy. You first need to install the graphviz package provided by your distribution. For a single package dependency graph:

./scripts/graph-depends libgtk2 > libgtk2.dot

dot -Tpdf libgtk2.dot -o libgtk2.pdf

For a full dependency graph:

./scripts/graph-depends > full.dot

dot -Tpdf full.dot -o full.pdf

Note that the dependency graph always depends on the selected set of packages. It is not the absolute dependency graph, which would contain all existing dependencies: it only shows the dependencies as they are in your current configuration.
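One simplified way to picture why the graph is configuration-specific: collect the packages enabled in a `.config` file (lines such as `BR2_PACKAGE_FOO=y`) and keep only the edges between enabled packages. This is a hypothetical sketch, not how graph-depends works internally; in Buildroot, optional dependencies are decided by each package's makefile. The name mapping below (lower-cased option suffix) is an assumption that ignores sub-options and package names containing '-'.

```python
from typing import Dict, List, Set


def enabled_packages(config_text: str) -> Set[str]:
    """Collect package names from Kconfig lines such as BR2_PACKAGE_FOO=y.

    Simplifying assumption: the package name is the lower-cased suffix of
    the option. Sub-options (e.g. BR2_PACKAGE_FOO_SOMETHING=y) and names
    containing '-' would need a proper mapping in real use.
    """
    prefix = "BR2_PACKAGE_"
    pkgs = set()
    for line in config_text.splitlines():
        line = line.strip()
        # Disabled options appear as comments ("# ... is not set") and are skipped.
        if line.startswith(prefix) and line.endswith("=y"):
            pkgs.add(line[len(prefix):-len("=y")].lower())
    return pkgs


def restrict(deps: Dict[str, List[str]], enabled: Set[str]) -> Dict[str, List[str]]:
    """Keep only enabled packages, and only edges between enabled packages."""
    return {
        pkg: [d for d in ds if d in enabled]
        for pkg, ds in deps.items()
        if pkg in enabled
    }


if __name__ == "__main__":
    config = """
BR2_PACKAGE_APP=y
BR2_PACKAGE_LIBFOO=y
# BR2_PACKAGE_LIBBAR is not set
"""
    # Hypothetical "all known dependencies" map.
    all_deps = {"app": ["libfoo", "libbar"], "libfoo": [], "libbar": []}
    print(restrict(all_deps, enabled_packages(config)))
    # prints {'app': ['libfoo'], 'libfoo': []}
```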

Plans for the next release

The next release is scheduled for August (2010.08). I have several things in mind for it:

- Cleanup of the bootloader compilation code and integration of support for Barebox. This is already implemented in one of my branches, so I should be able to push it to Buildroot fairly soon.

- Cleanup of the Linux kernel compilation code, including a much-wanted simplification of it. Again, this is already implemented on my side, but it needs a little more work before I can push it.

- Continue the effort to convert packages to the generic or autotools infrastructure. I have already sent a status update on this topic to the project mailing list. We have 56 packages to convert to the generic infrastructure and 77 to convert to the autotools infrastructure.

- Integration with Crosstool-NG, a job that Yann Morin, the developer of Crosstool-NG, has already started.

- More improvements to the external toolchain integration.

- If time permits, a cleanup and reorganization of the board support mechanism, so that we can add more boards in a sane way.
