The end of 2018 is approaching, which is the right time to provide an update on where we stand with the Allwinner VPU support in Linux. The promise of our Kickstarter campaign was to deliver all funded stretch goals by the end of 2018. As we’ll explain below, all the goals have been met, and only the upstreaming process is not completely finished for some features.

With the Linux 4.20 release happening just a few days ago, the core of the Cedrus driver and the media Request API that supports it are finally available in Linus Torvalds’ tree! Together, they provide the interface required for hardware-accelerated MPEG-2 decoding on the A13, A20, A33 and H3 Allwinner SoCs.

Cedrus driver in staging

Discussions regarding the details of the stateless video decoding interface offered by the V4L2 API are still taking place, which explains why our driver was integrated as a staging media driver. It was also decided to hide the associated interface for MPEG-2 decoding from the public kernel headers for now. This way, the interface is not considered stable and can keep evolving while those discussions continue. Because the kernel has a policy of never breaking backward compatibility, these interfaces can hardly change once they are declared stable, so it is crucial to ensure they are ready when that happens. This is especially true given the complexity of video decoding and the many pitfalls along the way.

Status of H.264 and H.265 decoding support

The code for H.264 and H.265 decoding is already developed and functional. It has been submitted for upstream inclusion, but it hasn’t been merged yet. However, with the decoding interfaces considered unstable for now, it should become easier to bring H.264 and H.265 decoding support to mainline. Support for these two codecs in the API and our driver is still under review, and we will continue sending new iterations with the suggested changes and fixes, as well as adaptations for the still-evolving decoding interface. More specifically, our series needs to be updated to the latest proposal for identifying reference frames, which is now based on the timestamps of the buffers.

Platform support: H5, A64 and A10 on the horizon

Regarding the platforms supported by our driver, the patches for H5 and A64 support have been accepted since our last update and they will make it to Linux 4.21! We are also looking to send out support for the A10, which was missing from our previous series (but shouldn’t cause any particular trouble).

DRM driver improvements

The adaptations to the DRM driver that allow displaying the raw decoded frames from the VPU on older platforms were also finalized, with the first half of the series already queued for 4.21. Subsequent changes in the series are still waiting for more reviews, as they affect common parts of the framework.

Conclusion

Overall, we are slowly wrapping up this project and hope to get the remaining pieces of our work integrated in mainline Linux as soon as possible, as there seem to be very few obstacles left in the way.

Back in August 2017, we wrote in a

Back in August 2017, we wrote in a

The 2018.11 release of

The 2018.11 release of

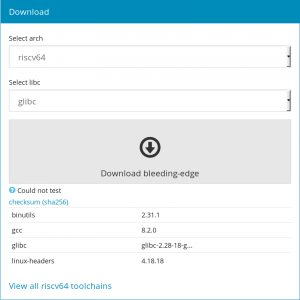

We have just published an updated version of the cross-compilation toolchains available at

We have just published an updated version of the cross-compilation toolchains available at