Using Xen on Ubuntu 6.10 (Edgy), using RAID storage.

License

Copyright 2006, Bootlin.

This mini-howto is released under the terms of the Creative Commons Attribution-ShareAlike 2.5 license.

Credits

Thanks to:

- Sébastien Chaumat, for making me feel like using Xen.

- Eric-Olivier Lamey, for sending feedback and making useful suggestions.

Introduction

In this document, we share our experience of running Xen on Ubuntu 6.10 (Edgy) with RAID storage.

Ubuntu 6.10 was used because it was the first Ubuntu version with Xen support. In earlier Ubuntu versions (in particular 6.06 LTS), you have to install Xen from source and tweak the C library manually (because of TLS support issues). The advantage of packages is that you can easily find out about and deploy security updates!

Another reason for using version 6.10 is that it uses the Linux kernel version supported by the latest Xen version (3.0.3 when we installed it). With Ubuntu 6.06, we would have needed to upgrade the kernel version, or to use an earlier Xen version.

Kernel configuration files are provided for the Via C7 based Dedibox servers available in France. Of course, these instructions should be useful for anyone trying to use Xen, whatever the server hardware, and even if RAID storage is not used.

We wanted to share our experience because we spent a significant amount of time looking for correct kernel configuration settings, bootloader settings (in particular for RAID), as well as Xen network and tuning settings. We hope that this document will save some of your time, in particular if you have a Dedibox server!

Note that this HOWTO may not give enough detail for inexperienced system administrators, who are unlikely to fiddle with Xen and RAID anyway.

Ubuntu 6.10 installation

For Dedibox users, we chose the following partition settings in the Dedibox installation interface:

- 1st partition: /boot, 256 MB, RAID1, ext3

- 2nd partition: /, 4096 MB, RAID1, ext3

- 3rd partition: /xen, 146225 MB, RAID1, ext3

- 4th partition: Linux swap, 2048 MB (2 separate partitions on sda and sdb)

Note that when using Xen, the swap space will only be used by Domain0, which is not supposed to run any services except an SSH server. The 2048 MB size is much more than needed, and 512 MB would probably be plenty. We kept 2048 MB in case we decide to stop using Xen and run all our services on a single, real server.

By default, the Dedibox or Edgy installer only took the first swap partition into account. However, Linux fully supports several swap partitions (max 2GB per partition). If these partitions are on different disks, as in our case, this is even better for performance, as Linux can access the 2 partitions in parallel.

To make the second swap partition work, we added it to the /etc/fstab file, ran mkswap /dev/sdb4 and then rebooted to check that everything was correctly set up.
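As a rough sketch of the fstab change: by default, a swap area activated later gets a lower priority than the earlier ones, so the kernel fills the first area before touching the second. Giving both areas the same priority with the pri= mount option makes the kernel allocate swap pages between them in round-robin fashion. The device names /dev/sda4 and /dev/sdb4 and the priority value 1 are assumptions based on the partition layout above, and the function name is only illustrative.

```shell
#!/bin/sh
# Print /etc/fstab entries that give every listed swap partition the same
# priority, so that the kernel spreads swap pages across them.
# Device names and the priority value are assumptions; adjust to your setup.
swap_fstab_entries() {
    for dev in "$@"; do
        printf '%s none swap sw,pri=1 0 0\n' "$dev"
    done
}

# Review the output, then add the lines to /etc/fstab by hand.
swap_fstab_entries /dev/sda4 /dev/sdb4
```

After editing /etc/fstab, swapon -a activates the new area without a reboot, and cat /proc/swaps lists the active areas with their priorities.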

For Dedibox users, note that the server doesn’t seem to boot if you do not choose a separate boot partition.

Adding packages

Uncomment all universe lines in /etc/apt/sources.list.

Install the packages we are going to need in the next sections:

apt-get update
apt-get install xen-hypervisor-3.0-i386 xen-source-2.6.17 xen-tools xen-utils-3.0 libc6-xen
apt-get install build-essential libncurses5-dev ccache

Kernel compiling

Of course, you may choose to use a generic Linux kernel provided by Ubuntu (such as xen-image-xen0-2.6.17-6-server-xen0). Follow the instructions below if you want to tune your kernel to your exact hardware.

cd /usr/src
tar jxf xen-source-2.6.17.tar.bz2
cd xen-source

To speed up recompiling, you can add ccache support in the kernel makefile. Change the lines defining HOSTCC and CC:

HOSTCC = ccache gcc
CC = ccache $(CROSS_COMPILE)gcc

Dedibox users can use our custom configuration file. We derived it from the Ubuntu Xen kernel configuration and applied the settings from the official Dedibox kernel configurations. You should still check that you have all the features you need, as we removed the features we do not use at the moment (such as NFS, ReiserFS, FAT…).

Compile your kernel:

make
make install
make modules_install

Bootloader configuration

The Grub bootloader configuration file needed to be updated to be able to load the Xen hypervisor kernel. Here’s what we added to our /boot/grub/menu.lst file before the ## ## End Default Options ## line:

title XEN/2.6.17-bootlin
root (hd0,0)
kernel /xen-3.0-i386.gz
module /vmlinuz-2.6.17.11-ubuntu1-xen0-dedibox-bootlin1 root=/dev/md1 md=1,/dev/sda2,/dev/sdb2 ro quiet splash

You can see that Grub loads files from the first raw partition (not using RAID), while Linux directly boots from the RAID device. In this case, file paths are relative to the /boot partition. You will have to adjust the file paths if /boot is part of the / partition.

Note that there is no clear documentation on the minimum amount of memory needed for dom0, the privileged Xen domain, from which you are going to control the standard Xen domains. We found that many sites use 128 MB, but others seem to be working fine with 64 MB. Try it yourself if physical RAM is scarce!

Testing dom0

You are now ready to test dom0!

Just reboot and hope that your new kernel boots well. If you administer a remote server and this doesn’t work, debugging is tricky (believe us!), because you have no access to the system console. If this happens to you, we advise you to start from a working configuration, like ours or the default Ubuntu kernel, and apply your changes little by little.

Once you have a working shell, you can run top and check that you are no longer running on your regular server. In particular, you will only see the amount of RAM that you allocated to dom0 in the Grub configuration file. You can also run uname -r to check that you are running your new kernel, and xm info to get more information about the Xen hypervisor running on your machine.

Configuring regular Xen domains

To configure networking between dom0 and regular domains, we decided to use regular routing and NAT. Xen uses bridging by default, but we are less familiar with this method.

Set this in the /etc/xen/xend-config.sxp file, by making sure that the bridge settings are commented out, and by uncommenting the two lines below:

(network-script network-nat)
(vif-script vif-nat)

We also commented out domain migration settings, as we are not using them (yet).

Creating a new domU domain

Create a 1 GB (for example) sparse file for dom1 system files and format it:

dd if=/dev/zero of=/xen/dom1.img bs=1024k seek=1024 count=0
mkfs.ext3 -F /xen/dom1.img

Sparse files are files containing holes that read back as zeros. No space is used on real storage until the empty blocks are written to. In short, they only take up the space of their actual contents.
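To see this behaviour for yourself, here is a small sketch that creates a sparse file with the same dd technique and compares its apparent size with the space actually allocated. The temporary directory and the 16 MB size are arbitrary choices made for this illustration, and the result assumes a filesystem that supports sparse files (ext3 does).

```shell
#!/bin/sh
# Compare the apparent size of a sparse file with the space it really uses.
# The temporary directory and the 16 MB size are arbitrary choices.
workdir=$(mktemp -d)
img="$workdir/sparse-demo.img"

# Same technique as for dom1.img: seek past the end without writing any data.
dd if=/dev/zero of="$img" bs=1024k seek=16 count=0 2>/dev/null

apparent_bytes=$(( $(wc -c < "$img") ))    # length of the file in bytes
used_kb=$(du -k "$img" | cut -f1)          # space actually allocated, in KB

echo "apparent size: $apparent_bytes bytes"
echo "disk usage:    $used_kb KB"

rm -rf "$workdir"
```

The same du -k check can be run on /xen/dom1.img to watch the image grow as the domain writes data.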

Create a swap partition image file:

dd if=/dev/zero of=/xen/dom1-swap.img bs=128M count=1
mkswap /xen/dom1-swap.img

We also created a separate 8 GB filesystem for dom1 data files (those not belonging to the operating system):

dd if=/dev/zero of=/xen/dom1-data.img bs=1024k seek=8192 count=0
mkfs.ext3 -F /xen/dom1-data.img

Populate the root filesystem for dom1:

mkdir /mnt/dom1
mount -o loop /xen/dom1.img /mnt/dom1
debootstrap edgy /mnt/dom1

Copy the kernel modules too:

rsync -a /lib/modules/2.6.17.11-ubuntu1-xen0-dedibox-bootlin1/ /mnt/dom1/lib/modules/2.6.17.11-ubuntu1-xen0-dedibox-bootlin1/

Copy the /etc/apt/sources.list and /etc/resolv.conf files too.

Configuring domU

Declare the new domain in a /etc/xen/dom1.cfg file:

kernel = "/boot/vmlinuz-2.6.17.11-ubuntu1-xen0-dedibox-bootlin1"
memory = 96
name = "dom1"
vcpus = 1
disk = [ 'file:/xen/dom1.img,ioemu:hda1,w','file:/xen/dom1-data.img,ioemu:hda2,w','file:/xen/dom1-swap.img,ioemu:hda3,w' ]
root = "/dev/hda1 ro"
vif = [ 'ip=10.0.0.1' ]
dhcp = "off"
hostname = "dom1.bootlin.com"
ip = "10.0.0.1"
netmask = "255.0.0.0"
gateway = "10.0.0.254"

Of course, replace dom1 with a meaningful name!

Here, you can see that we assign 10.0.0.x to domx domains.

You can also see that we are using the same kernel as the one we use for dom0. This is not required at all, and we will soon propose a slightly lighter domU kernel without things which are not needed in unprivileged domains (no RAID, no netfilter…).
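If you plan to create several domains, the "domx uses 10.0.0.x" convention can be captured in a small generator. This is only a sketch: the function name make_domu_cfg is hypothetical, and every setting is copied unchanged from the dom1 example, so review the output (memory size, kernel, gateway) before using it.

```shell
#!/bin/sh
# Print a domain configuration for domain number N, following the
# "domN uses 10.0.0.N" convention. The function name is hypothetical and
# the settings are copied from the dom1 example; review before use.
make_domu_cfg() {
    n=$1
    cat <<EOF
kernel = "/boot/vmlinuz-2.6.17.11-ubuntu1-xen0-dedibox-bootlin1"
memory = 96
name = "dom$n"
vcpus = 1
disk = [ 'file:/xen/dom$n.img,ioemu:hda1,w','file:/xen/dom$n-data.img,ioemu:hda2,w','file:/xen/dom$n-swap.img,ioemu:hda3,w' ]
root = "/dev/hda1 ro"
vif = [ 'ip=10.0.0.$n' ]
dhcp = "off"
hostname = "dom$n.bootlin.com"
ip = "10.0.0.$n"
netmask = "255.0.0.0"
gateway = "10.0.0.254"
EOF
}

# Print the configuration for a second domain.
make_domu_cfg 2
```

Redirecting the output, for example make_domu_cfg 2 > /etc/xen/dom2.cfg, would create the file for dom2. The matching image files still have to be created as described in the previous section.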

Booting and configuring domU

Start the new virtual machine and access a console:

xm create /etc/xen/dom1.cfg
xm console dom1

We gave you both commands, as the second one is useful to access the console of an already running domain. However, you can create a domain and access its console in a single command, using the -c option:

xm create -c /etc/xen/dom1.cfg

You are connected to your new virtual system, with minimum Ubuntu server packages. There are still a few things to adjust though:

Set the root password, otherwise there is no password!

passwd

Fill up the /etc/fstab file as follows:

# /etc/fstab: static file system information.
#
/dev/hda1 / ext3 defaults,errors=remount-ro 0 1
/dev/hda2 /data ext3 defaults 0 2
/dev/hda3 none swap sw 0 0

Fill up the /etc/network/interfaces file as follows:

# Used by ifup(8) and ifdown(8). See the interfaces(5) manpage or
# /usr/share/doc/ifupdown/examples for more information.
# The loopback network interface
auto lo
iface lo inet loopback
auto eth0
iface eth0 inet static
    address 10.0.0.1
    netmask 255.0.0.0
    broadcast 10.0.0.255
    gateway 10.0.0.128

Edit the /etc/hosts file as follows:

127.0.0.1 localhost localhost.localdomain
127.0.0.1 dom1

Fill up the /etc/hostname file as follows:

dom1

Exit the dom1 console by typing exit and then pressing Ctrl-], and reboot dom1:

xm reboot dom1

Back in the dom1 console, check networking with dom0 and with the outside world:

ping 10.0.0.128
ping bootlin.com

Install extra packages:

apt-get install libc6-xen deborphan psutils wget rsync openssh-client

Remove packages we will not need in the Ubuntu distribution:

apt-get remove --purge wireless-tools wpasupplicant pcmciautils libusb-0.1-4 alsa-base alsa-utils dhcp3-common dmidecode linux-sound-base x11-common eject libconsole aptitude groff-base

Keep your current configuration as a reference starting point for other domU domains you will create:

cp dom1.img domu.img.ref

Now we are going to create NAT (Network Address Translation) rules to forward incoming Internet packets to the right server on your virtual local network. Write your own rules in /etc/network/if-up.d/iptables in dom0 (make sure you make this file executable!). Here’s an example for an HTTP server and a BitTorrent seed server.

#!/bin/sh
### Port Forwarding ###
iptables -A PREROUTING -t nat -p tcp -i eth0 --dport 80 -j DNAT --to 10.0.0.1
iptables -A PREROUTING -t nat -p tcp -i eth0 --dport 6881:6889 -j DNAT --to 10.0.0.2
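As the number of domains grows, it may be easier to keep the forwarding rules in a small table and generate the iptables calls from it. The sketch below is one possible way to do that, not part of our setup: the IPTABLES variable and the forward_ports function are hypothetical helpers, and by default the rules are only printed so that you can review them.

```shell
#!/bin/sh
# Generate DNAT rules from a "protocol port(s) destination" table.
# By default the rules are only printed; set IPTABLES=iptables to apply them.
# The IPTABLES variable and the function name are hypothetical helpers.
IPTABLES=${IPTABLES:-echo iptables}

forward_ports() {
    while read -r proto ports dest; do
        # Skip blank lines and comments in the table.
        case "$proto" in ''|'#'*) continue ;; esac
        $IPTABLES -A PREROUTING -t nat -p "$proto" -i eth0 \
            --dport "$ports" -j DNAT --to "$dest"
    done
}

forward_ports <<EOF
# proto  ports      destination
tcp      80         10.0.0.1
tcp      6881:6889  10.0.0.2
EOF
```

Each table row produces one rule with the same options as the hand-written example, so adding a service to a new domain is a one-line change.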

Now, make sure your domain is started when your server is started, by adding a link in /etc/xen/auto/:

cd /etc/xen/auto/
ln -s ../dom1.cfg .

Ubuntu Edgy fixes

tty fixes

If you are using only xm console to connect to dom1, and do not plan to use ssh, you will notice that there are 5 getty processes running all the time waiting for a terminal connection on /dev/tty2 to /dev/tty6, while you just use /dev/tty0.

This not only wastes some CPU cycles and a few MB of RAM: these getty processes also keep failing and get respawned by the upstart init process. This causes a lot of writes to the /var/log/daemon.log file, which could eventually fill up your root filesystem.

Here’s a quick fix for this:

rm /etc/event.d/tty2
rm /etc/event.d/tty3
rm /etc/event.d/tty4
rm /etc/event.d/tty5
rm /etc/event.d/tty6

Note that you may need to run this again each time the initscripts package is updated.
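Since this fix may have to be repeated, it can be convenient to keep it as a small function. The sketch below is an illustration only: remove_extra_ttys is a hypothetical name, and the block tries the function on a scratch directory rather than on /etc/event.d itself.

```shell
#!/bin/sh
# Remove the upstart job files tty2 to tty6 from the given directory,
# leaving tty1 in place. The function name is a hypothetical helper.
remove_extra_ttys() {
    for n in 2 3 4 5 6; do
        # -f keeps the command quiet when a file is already gone.
        rm -f "$1/tty$n"
    done
}

# Dry run on a scratch directory that mimics /etc/event.d.
demo=$(mktemp -d)
for n in 1 2 3 4 5 6; do : > "$demo/tty$n"; done
remove_extra_ttys "$demo"
ls "$demo"
rm -rf "$demo"
```

In the domain itself, the call would be remove_extra_ttys /etc/event.d.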

Configuring CPU sharing

With Xen, you can not only set the amount of physical RAM used by each domain. You can also set a kind of CPU priority for more critical domains needing fast response times.

Of course, you could also set the vcpus setting to values greater than one, but this has several drawbacks:

- You would need an SMP enabled kernel.

- This method only allows discrete settings, and you would need quite a large number of vcpus to get a 45% / 55% CPU share, for example.

- There is no easy way to add more CPU power to a domain without rebooting it (you may try Linux CPU hotplugging capabilities, though).

Fortunately, the "xm sched-credit" command lets you change the weight and cap settings of each domain. See the Credit based CPU scheduler page for details about this command.

What’s good is that you can change those settings on the fly, according to actual server loads, without having to restart the corresponding domain. Unfortunately, Xen 3.0.3 doesn’t let you configure initial weight and cap settings at domain creation time through the domain configuration file (such a feature should be available in Xen 3.0.4, as a patch has been committed in the development version). Until such a feature is available, here’s an example implementation:

Create a /etc/init.d/xen-sched-credits file with execute permissions:

#!/bin/sh
# Sets initial weight and cap for xen domains
# In xen 3.0.4, this should be possible
# to set these values in the domain configuration files
# dom1 domain
xm sched-credit -d dom1 -w 64
xm sched-credit -d dom1 -c 25
# dom2 domain
xm sched-credit -d dom2 -w 256
xm sched-credit -d dom2 -c 50

Add this file to init runlevel 2 in dom0:

cd /etc/rc2.d/
ln -s ../init.d/xen-sched-credits S99xen-sched-credits
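As a variation on the xen-sched-credits script, the per-domain settings can be kept in a table so that adding a domain only takes one line. This is a sketch under assumptions: the XM variable and the apply_sched_credits function are hypothetical, and by default the commands are only printed. Set XM=xm to actually run them.

```shell
#!/bin/sh
# Apply weight and cap settings from a "domain weight cap" table.
# XM is a hypothetical override: the default "echo xm" prints the commands
# instead of running them; set XM=xm to apply the settings for real.
XM=${XM:-echo xm}

apply_sched_credits() {
    while read -r dom weight cap; do
        # Skip blank lines and comments in the table.
        case "$dom" in ''|'#'*) continue ;; esac
        $XM sched-credit -d "$dom" -w "$weight"
        $XM sched-credit -d "$dom" -c "$cap"
    done
}

apply_sched_credits <<EOF
# domain  weight  cap
dom1      64      25
dom2      256     50
EOF
```

The weight and cap are still set with two separate calls, exactly as in the original script.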

Useful tips

Making changes in a domain

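A nice thing about Xen, as opposed to a real server, is that you don’t need the domain to be running to make changes, or even to upgrade packages! With the domain shut down, mount its root filesystem image in dom0 and chroot into it (remember to unmount the image before starting the domain again):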

mkdir /mnt/dom1
mount -o loop /xen/dom1.img /mnt/dom1
chroot /mnt/dom1

Further tasks

Congratulations! You are now ready to create more domains, and fine tune Xen according to your exact requirements.

Have fun! We hope that this HOWTO saved some of your time, anticipated some of your questions, and made you feel like sharing what you learn in turn.

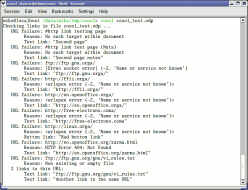

Useful links

On-line resources that we used to configure Xen:

- Wikipedia’s article on Xen.

- Xen documentation

- Xen from Ubuntu community documentation.

- How To Set Up Xen 3.0 From Binaries In Ubuntu 6.06 LTS (Dapper Drake) (Howto forge, from Ásgeir Bjarni Ingvarsson).

- The Perfect Xen 3.0.1 Setup For Debian (Howto forge, from Falko Timme).
