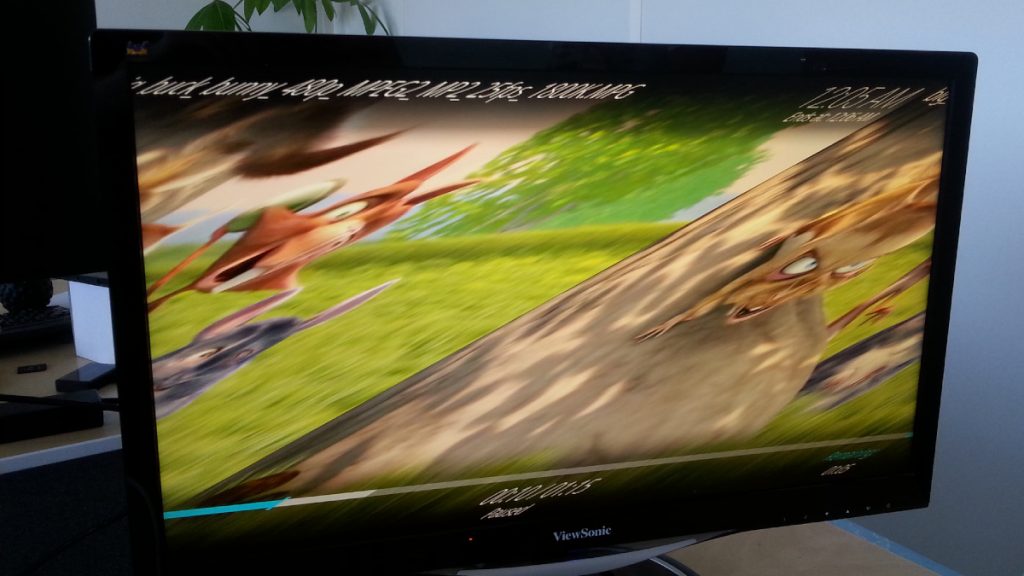

This week, we sent out version 5 of the Sunxi-Cedrus VPU driver, which uses version 16 of the media request API. The API contains the internal plumbing needed to tie metadata about the video frame being decoded to the associated source buffer. The metadata is exposed as V4L2 controls, which are data structures set by userspace. The source buffer holds the frame's encoded data, extracted in slices from the raw video bitstream. Adding this feature to the Linux kernel paves the way for supporting stateless VPUs such as Allwinner's Video Engine, which is found in various ARM platforms. Version 16 fixed a number of reliability issues, and we were able to run decoding tests for hours without hitting any error!

This new version of our driver contains several improvements, which are presented in the cover letter of the series. Most notably, it brings support for the H3 (which uses the second revision of Allwinner's Display Engine hardware block) and exposes linear YUV output in addition to the tiled output format. H264 decoding used to fail when the luma and chroma planes were too far apart in memory; we fixed this by allocating contiguous buffers for the destination frames. However, this required significant changes in our display pipeline, so we took the opportunity to rework both cedrus-frame-test and libva-cedrus to handle various scenarios for matching buffers and planes, and to avoid hardcoded values that are specific to our pipeline. This opens the way to making these tools generic users of the V4L2 and DRM APIs, without any particular tie to our specific platform and setup.
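As an illustration of what a contiguous destination buffer means for plane placement, the following sketch computes plane offsets for a linear frame where the chroma plane directly follows the luma plane. It assumes an NV12-style layout (full-resolution luma, interleaved half-height chroma) and a power-of-two stride alignment. The `frame_layout` structure and both function names are hypothetical and are not taken from the driver.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical description of one destination frame in a single buffer. */
struct frame_layout {
    size_t luma_offset;
    size_t luma_size;
    size_t chroma_offset;
    size_t chroma_size;
    size_t total_size;
};

/* Round value up to the next multiple of align (a power of two). */
static size_t align_up(size_t value, size_t align)
{
    assert(align != 0 && (align & (align - 1)) == 0);
    return (value + align - 1) & ~(align - 1);
}

/*
 * Place the chroma plane immediately after the luma plane, so that both
 * planes live in one contiguous allocation (assumed NV12-style layout).
 */
static struct frame_layout nv12_contiguous_layout(unsigned int width,
                                                  unsigned int height,
                                                  size_t stride_align)
{
    struct frame_layout layout;
    size_t stride = align_up(width, stride_align);

    layout.luma_offset = 0;
    layout.luma_size = stride * height;
    layout.chroma_offset = layout.luma_offset + layout.luma_size;
    /* Interleaved chroma: same stride, half the number of lines. */
    layout.chroma_size = stride * ((height + 1) / 2);
    layout.total_size = layout.chroma_offset + layout.chroma_size;

    return layout;
}
```

With this kind of layout, the distance between the two planes is bounded by the size of the luma plane. A userspace tool can pass the offsets along to the display side instead of relying on hardcoded values.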

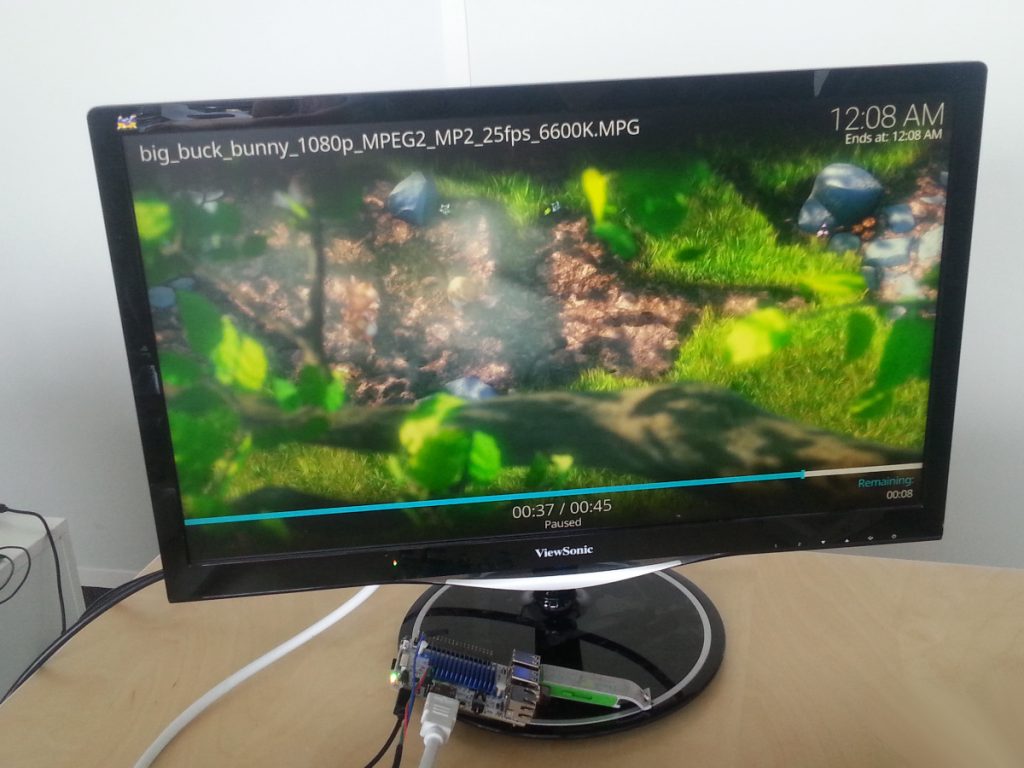

H264 support (for the baseline profile) was also merged in our cedrus kernel tree, as well as in the cedrus-frame-test and libva-cedrus master branches. With that, we were able to cook a LibreELEC build with support for our updated libva-cedrus, which allows decoding H264 videos! The result is presented in the video below, which runs on an A33 and shows Sintel, an animated film made with free software by the Blender Foundation and released under the Creative Commons Attribution 3.0 Generic license:

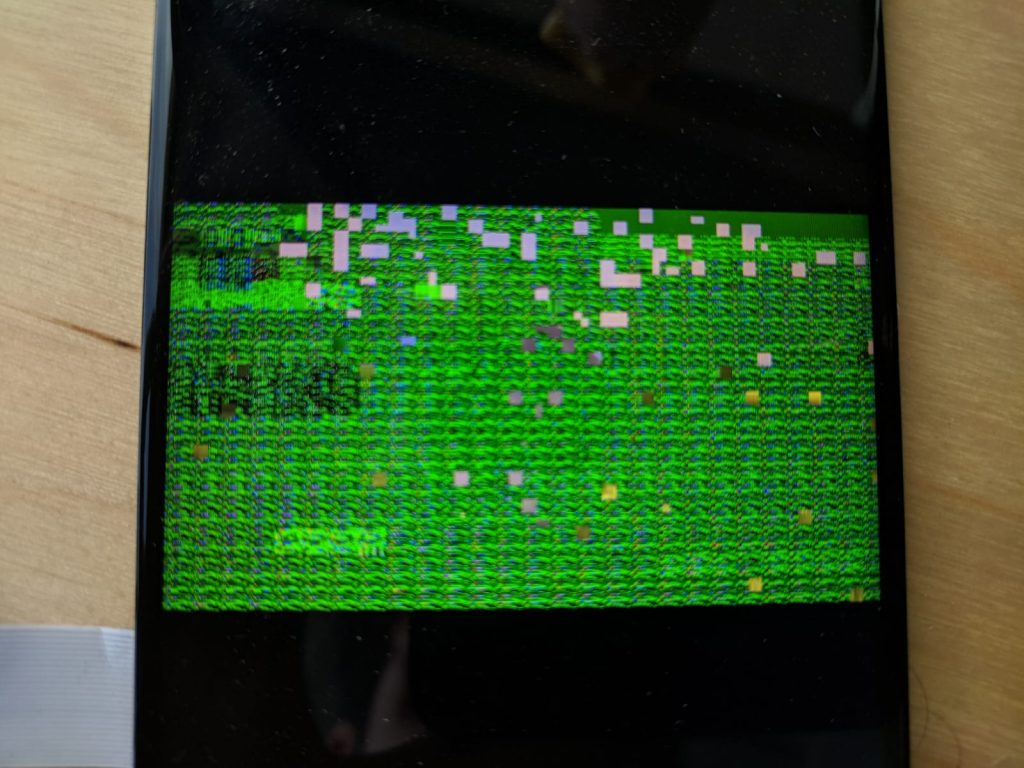

We also spent some time figuring out the reason for the various artifacts seen on the A20 when using the display scaler. The cause turned out to be a missing register setting, along with another register whose documented value is off by one, which resulted in the last line of the picture repeating itself.

Once that was done, we switched to working on the H264 issue we mentioned last week. After testing a few ideas, we now have the H264 high profile working with libva-dump and cedrus-frame-test. The next step will be to port the new reference frame handling code to libva-cedrus, and hopefully we will then be able to use it in our usual players.