Integration with video players

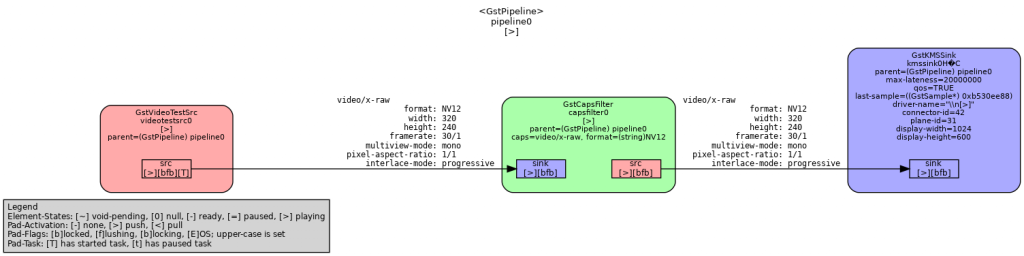

The work conducted this week on the video output side was focused on writing a shader for untiling the MB32 NV12-based format used by the VPU to output frames. This brought various challenges, some of which are presented below.

Since GLES and EGL are generic APIs that are not tied to a particular driver implementation, it made sense to start writing the shader on an x86 Intel-based device with GPU support in Mesa 3D, which speeds up development. The first step was to display the raw pixel values from the luminance plane through the shader. Two shaders are actually required: one for the vertex processor and one for the fragment (pixel) processor of the GPU. The former applies geometrical operations to the vertices (the points that define the 3D mesh), while the latter defines the color of each pixel rendered from that mesh. In our case, the mesh is simply a rectangle that matches our window size.

The tiled NV12 luminance plane is uploaded to the GPU as a 1-byte-per-pixel texture, which allows addressing each component separately. However, texture coordinates are normalized by the GPU, so the coordinates used to retrieve texels (texture pixels) from the texture sampler are specified as fractional floating-point values. This makes it tricky to ensure that the right value is retrieved, especially since the GPU might apply various filtering techniques (which are a really good thing to have when dealing with actual textures for 3D models, though).
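To illustrate the normalized-coordinate issue, here is a minimal Python sketch (not the shader itself) of how an integer texel index maps to a normalized coordinate and back. It assumes nearest-neighbour sampling, and the function names and the 1280-texel width are hypothetical. Aiming at the center of a texel, rather than its edge, keeps the lookup away from the boundary between two neighbouring texels.

```python
import math


def texel_center(index: int, size: int) -> float:
    """Normalized coordinate of the center of texel `index` on an axis of `size` texels."""
    return (index + 0.5) / size


def nearest_texel(coord: float, size: int) -> int:
    """Texel index that nearest-neighbour sampling selects for a normalized coordinate."""
    return min(size - 1, max(0, math.floor(coord * size)))


# Hypothetical plane width, used only for this illustration.
width = 1280

# Sampling at the texel center always lands back on the intended texel.
for x in (0, 1, 639, 1279):
    assert nearest_texel(texel_center(x, width), width) == x
```

With linear filtering enabled, a coordinate that is not exactly at a texel center would blend neighbouring texels, which is why filtering matters here.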

Setting up the vertex and fragment shaders to linearly display the pixels from the tiled format results in a mangled display (as expected):

Thanks to the documentation made available by the linux-sunxi community, it was possible to quickly draft a formula for getting the right texel location, which produced mixed results:

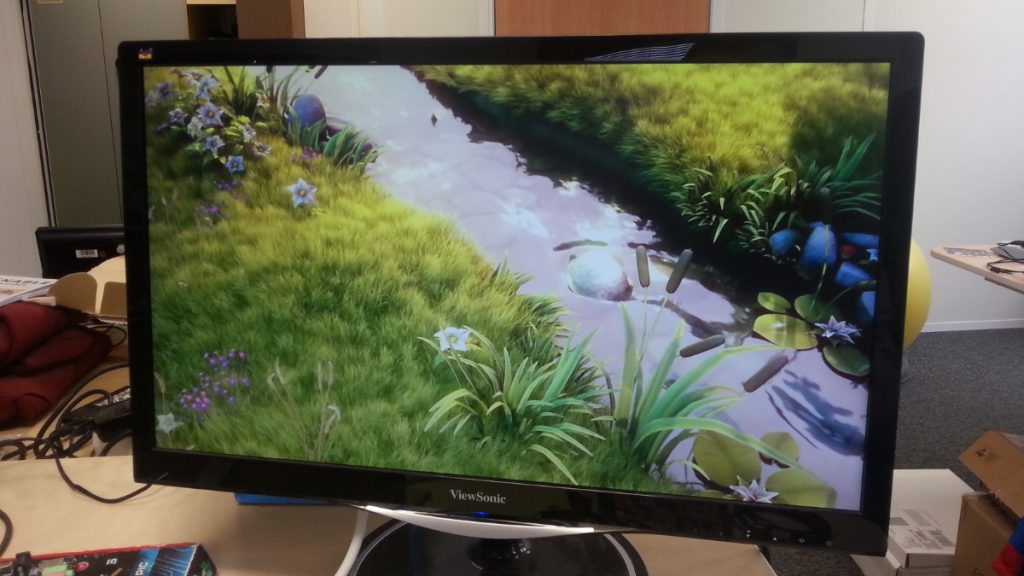
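The kind of address computation involved can be sketched on the CPU side. The following Python example is only an illustration under stated assumptions, not the formula used in the shader: it assumes 32x32-byte tiles, stored one after another in row-major order, with the bytes inside each tile also in row-major order, and a plane whose dimensions are padded to a multiple of the tile size. The names `tiled_offset` and `untile_plane` are hypothetical.

```python
TILE_W = 32  # assumed tile width in bytes
TILE_H = 32  # assumed tile height in rows


def tiled_offset(x: int, y: int, width: int) -> int:
    """Byte offset of sample (x, y) in a tiled plane `width` samples wide."""
    tiles_per_row = (width + TILE_W - 1) // TILE_W  # width rounded up to whole tiles
    tile_x, in_x = divmod(x, TILE_W)
    tile_y, in_y = divmod(y, TILE_H)
    tile_index = tile_y * tiles_per_row + tile_x
    return tile_index * TILE_W * TILE_H + in_y * TILE_W + in_x


def untile_plane(tiled: bytes, width: int, height: int) -> bytes:
    """Copy a tiled plane into a linear (row-major) buffer of width * height bytes."""
    linear = bytearray(width * height)
    for y in range(height):
        for x in range(width):
            linear[y * width + x] = tiled[tiled_offset(x, y, width)]
    return bytes(linear)
```

A shader has to perform the same mapping per fragment, with the added difficulty that the result must be converted back to normalized texture coordinates.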

With some extra work (and quirks for ensuring that the right texel is picked on tile edges), the luminance component was finally displayed correctly:

Next up was the chrominance component, which required importing a second dedicated texture. The first attempts led to funky-looking coloring of the frame:

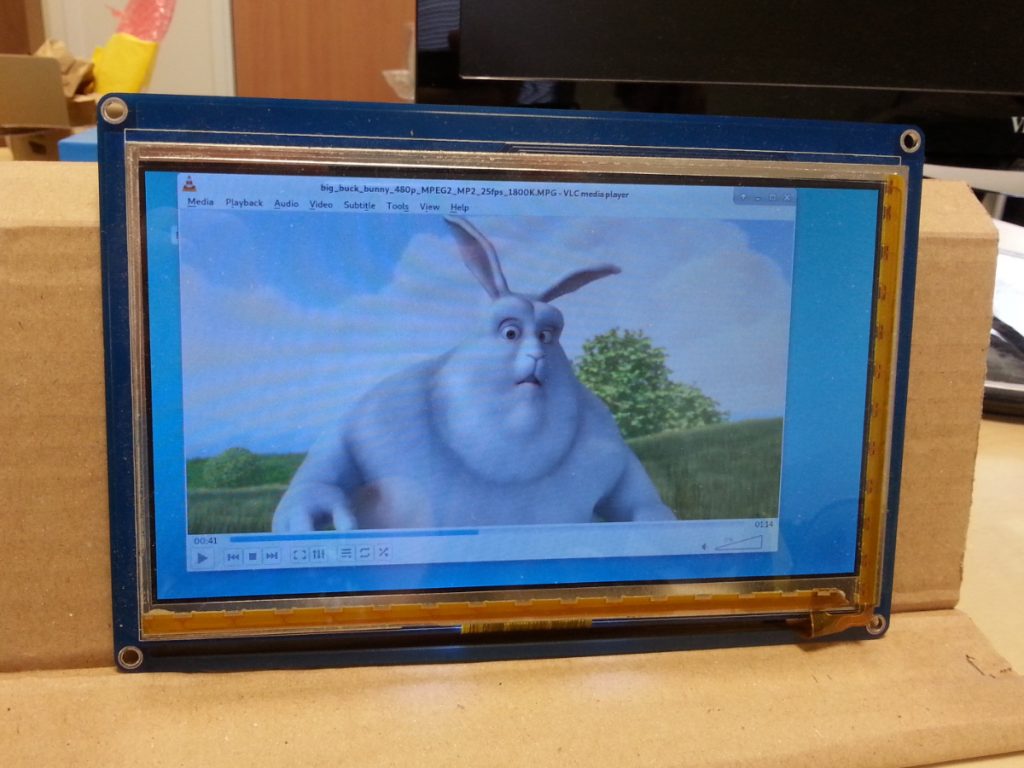

Until the shader was corrected to end up with a good-looking picture:
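As a side note on the chrominance step: in NV12, the chrominance plane is subsampled by two in both directions and stores the U and V samples interleaved, so each 2x2 block of pixels shares one (U, V) pair. The sketch below shows the lookup for a linear (untiled) NV12 chrominance plane, assuming an even width and a line stride equal to the width; the function name is hypothetical, and the tiled case additionally needs the tile address computation.

```python
def nv12_chroma_offsets(x: int, y: int, width: int) -> tuple[int, int]:
    """Byte offsets of the U and V samples for pixel (x, y).

    Offsets are relative to the start of the interleaved chrominance plane.
    Assumes a linear layout, an even width, and a stride equal to `width`.
    """
    row = y // 2          # one chrominance row covers two picture rows
    col = (x // 2) * 2    # each (U, V) pair takes two bytes and covers two pixels
    u_offset = row * width + col
    return u_offset, u_offset + 1


# The four pixels of a 2x2 block share the same chrominance pair.
assert nv12_chroma_offsets(0, 0, 8) == nv12_chroma_offsets(1, 1, 8)
```

Reading U and V from the wrong byte of the pair, or from the wrong row, is the kind of mistake that gives a frame with the right shapes but wrong colors.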

Real trouble began when porting this work to the Mali, which does not behave the same way when it comes to texture uploads (it requires line-by-line upload for 1-byte-per-pixel formats). Since we are aiming at DMAbuf import instead of (slow) texture upload, no time was spent coping with the difference. The main issue with DMAbuf import is that the usual one-byte-per-pixel format (described by the DRM_FORMAT_R8 fourcc code) is simply not supported by the GPU, leaving only RGB and YUV formats as options, neither of which directly fits the bill. We are still investigating ways to make our texture available to the GPU’s texture sampler without extra copies (or with copies that are fast enough for our needs).

H264 support

We also worked on H264 decoding in the kernel, and some progress was made. The libva-dump and cedrus-frame-test ports are now done, and we’ve been able to run cedrus-frame-test on 32 frames without any hiccups… Unfortunately, while the VPU reports the frames as properly decoded, the contents of the output buffer are blank, which is obviously not great. Since then, we have simplified the test to decode a single frame, and compared the register write sequence between libvdpau-sunxi and our kernel code. This has allowed us to find some bugs in the driver, but for now we still can’t decode a frame. We shouldn’t be far off now though, so stay tuned for our next status update!