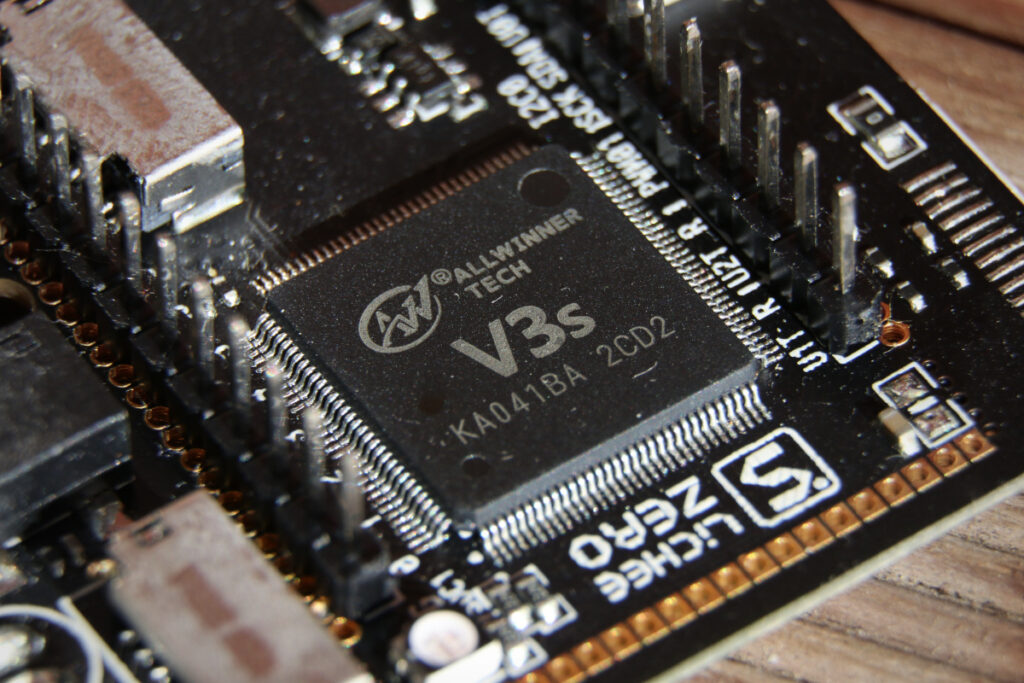

Bootlin has been involved with improving multimedia support on Allwinner platforms in the upstream Linux kernel for many years. This includes notable contributions such as initial support for hardware-accelerated video decoding of MPEG-2, H.264 and H.265 following the successful crowd-funding campaign in 2018, support for the MIPI CSI-2 camera interface and early support for the Allwinner V3/V3s/S3 Image Signal Processor (ISP) in mainline Linux.

In addition to this work focused on Allwinner platforms, in 2021 we also developed and released initial Linux kernel support for the Hantro H1 H.264 stateless video encoder, used on Rockchip processors, on top of the mainline verisilicon/hantro driver.

Today Bootlin is happy to announce the release of Linux kernel support for H.264 video encoding with the Allwinner V3/V3s/S3 platforms, in the form of a series of patches on top of the mainline Linux cedrus driver (which already supports decoding) and a dedicated userspace test tool. The code is available in the following repositories:

- GitHub: bootlin/linux (cedrus/h264-encoding branch)

- GitHub: bootlin/v4l2-cedrus-enc-test

This work is both the result of our internal research and a continuation of earlier projects that were carried out by members of the linux-sunxi community to document and implement early proofs of concept for H.264 encoding, covering older platforms such as the A20 and H3. We would like to give a warm thank-you for these previous contributions, which made life significantly easier for us.

Adding support for H.264 encoding required a significant architectural rework of the driver, which was rather messy and not very well structured (but it was of course quite difficult for us to find the most elegant way of writing the driver back in 2018, when we were just getting started on the topic). With a clear organization and adequate abstractions in place, it became much easier to add encoding support and focus on the hardware-specific aspects.

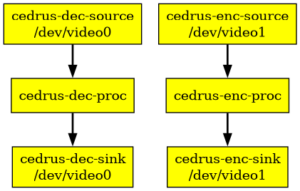

Just like decoding, encoding is based on the V4L2 M2M framework and shares the same V4L2 and M2M devices with decoding (meaning that only a single job of either decode or encode can be processed at a time). Two video device nodes are exposed to userspace, allowing as many decoding and encoding contexts as needed to exist simultaneously. Advanced H.264 codec features such as CABAC entropy coding and P frames are supported.
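The constraint described above (many contexts, but only one hardware job at a time) can be pictured with a small toy model. This is only an illustrative Python sketch, not the cedrus driver or the kernel's M2M code: the `Context` and `M2MDevice` classes and the simple round-robin policy are assumptions made for the example.

```python
from collections import deque


class Context:
    """Hypothetical stand-in for one open handle on a video node."""

    def __init__(self, name, kind):
        self.name = name
        self.kind = kind      # "decode" or "encode"
        self.src = deque()    # queued source buffers
        self.dst = deque()    # queued destination buffers

    def ready(self):
        # A job needs one source and one destination buffer.
        return bool(self.src) and bool(self.dst)


class M2MDevice:
    """Toy model of the single M2M device shared by decode and encode."""

    def __init__(self):
        self.pending = deque()  # contexts waiting for the hardware
        self.log = []           # jobs in the order they were run

    def queue(self, ctx, src, dst):
        ctx.src.append(src)
        ctx.dst.append(dst)
        if ctx not in self.pending:
            self.pending.append(ctx)

    def run(self):
        # Only one job runs at a time, whichever context it belongs to.
        while self.pending:
            ctx = self.pending.popleft()
            if not ctx.ready():
                continue
            job = (ctx.name, ctx.kind, ctx.src.popleft(), ctx.dst.popleft())
            self.log.append(job)
            if ctx.ready():
                # Go to the back of the line so other contexts get a turn.
                self.pending.append(ctx)
        return self.log


dev = M2MDevice()
dec = Context("player", "decode")
enc = Context("recorder", "encode")
dev.queue(dec, "bitstream0", "frame0")
dev.queue(enc, "raw0", "h264_0")
dev.queue(dec, "bitstream1", "frame1")
for job in dev.run():
    print(job)
```

In this model the decode and encode contexts coexist, but their jobs are interleaved on the single device rather than executed in parallel.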

However, this work is not yet suitable for inclusion in the mainline Linux kernel since there is no well-defined userspace API (uAPI) for exposing stateless encoders. We have started discussions to converge towards an agreeable proposal that would be generic enough to avoid device-specific considerations in userspace while still benefiting from all the features and specificities that stateless encoders can provide. While discussions are still in progress, others have expressed interest in the same topic for the VP8 codec. Our previous work published in 2021 for the Hantro H1 H.264 stateless video encoder is also not upstream yet for the same reason: the need for a well-defined userspace API to expose stateless encoders.

Besides the introduction of a relevant new uAPI, a number of challenges remain in the path towards full support for Allwinner H.264 video encoding in mainline Linux:

- The rework of the driver needs to be submitted and merged upstream;

- Rate-control is currently not implemented and only direct QP controls are available;

- This work only covers the Allwinner V3/V3s/S3 platforms, while most other generations also feature different (yet rather similar) H.264 encoder units that could also be supported with some dedicated effort;

- Pre-processing features such as scaling and pixel format conversion are not yet supported;

- Userspace library support (such as FFmpeg or GStreamer) would need to be developed to make use of the stateless encoder uAPI.
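Since only direct QP controls are available, any rate control currently has to be done by the application. As a rough illustration of what that could look like, here is a minimal Python sketch that picks the QP for the next frame from the size of the previous one. The `next_qp` function, its parameters and the heuristic that frame size roughly halves for every +6 QP are assumptions for illustration; none of this is part of the driver or the test tool.

```python
import math

QP_MIN, QP_MAX = 0, 51  # H.264 QP range for 8-bit video


def next_qp(qp, frame_bits, target_bits, max_step=4):
    """Return the QP to use for the next frame.

    Heuristic (assumption): the quantizer step doubles every 6 QP, so the
    encoded frame size is treated as roughly halving for every +6 QP.
    """
    if frame_bits <= 0 or target_bits <= 0:
        return qp  # nothing sensible to compare against
    # Positive when the last frame was too big, negative when too small.
    delta = round(6 * math.log2(frame_bits / target_bits))
    # Limit the change per frame to avoid visible quality swings.
    delta = max(-max_step, min(max_step, delta))
    return max(QP_MIN, min(QP_MAX, qp + delta))


# Example: aim for 2 Mbit/s at 25 fps, i.e. 80000 bits per frame.
target = 2_000_000 // 25
qp = 30
for size in (160_000, 120_000, 80_000, 40_000):
    qp = next_qp(qp, size, target)
    print(size, "->", qp)
```

A real rate controller would also account for frame types (I versus P) and buffer constraints; this sketch only shows the basic feedback loop on top of a per-frame QP.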

If you are interested in funding the effort to help us advance any of these topics, feel free to get in touch with us to start the discussion!

Can’t wait for encoding support for the Pinephone!

There is still a long way ahead but we are getting closer!

Thanks for the awesome work Paul!

Looking forward to FFmpeg support, thanks for your work!

That would be the end-game yes! But first we need to agree on a uAPI and get this work merged upstream before it really makes sense to start significant userspace work.

Hello.

Does this cedrus encoder support V4L2_MEMORY_DMABUF for ‘output_buffer’?

I have exported buffers allocated from a V4L2 camera using VIDIOC_EXPBUF, but when I queue a DMABUF for ‘output_buffer’ following this guide: https://docs.kernel.org/userspace-api/media/v4l/dmabuf.html

I get:

VIDIOC_QBUF failed: Bad address

At the moment I can only encode using memcpy() (which is CPU-hungry).

Hello,

Is there any implementation of JPEG encoder?

Do you know if it is difficult to implement it based on the H264 encoder?

As far as I’m aware, there is no implementation of the JPEG encoder for the Allwinner VPU in the upstream Linux kernel. Feel free to contact us if you’re interested in getting commercial support to develop this feature.

Hello,

I am interested in developing a solution to send video images in real time, using a NanoPi Duo2 development board. I am currently using this board with DietPi (Linux version 4.14.52 (root@wwd) (gcc version 4.9.3)), GStreamer and server services from ngrok.com.

The problem is that I use software video encoding at low resolution (720×480 at only 20 fps), with very high resource consumption (energy and CPU). At the moment, if I increase the resolution, I only get 2-4 fps at most.

The NanoPi Duo2 board supports a 5-megapixel video camera. I would be interested in the development of a solution able to transmit video images in real time at a higher resolution of 1920×1080 at 15-20 fps, with lower resource consumption.

I am waiting for an answer: tech.romaio@gmail.com