Recently, one of our customers designing an embedded Linux system with specific audio needs had a sound card with more than one audio channel, and needed to separate individual channels so that they could be used by different applications. As this is a fairly common use case, we would like to share in this blog post how we achieved it, for both input and output audio channels.

The most common use case would be splitting a 4- or 8-channel sound card into multiple stereo PCM devices. For this, alsa-lib, the userspace interface to the ALSA drivers, provides PCM plugins. Those plugins are configured through configuration files, usually /etc/asound.conf or ~/.asoundrc. However, thanks to the way /usr/share/alsa/alsa.conf is set up, it is also possible, and in fact recommended, to use a card-specific configuration file named /usr/share/alsa/cards/<card_name>.conf.

The syntax of this configuration is documented in the alsa-lib configuration documentation, and the most relevant part for our purpose is the PCM plugin documentation.

Audio inputs

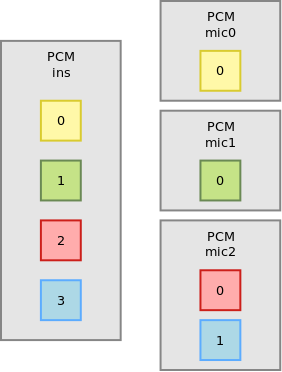

For example, let’s say we have a 4-channel input sound card, which we want to split into 2 mono inputs and one stereo input, as shown above.

In the ALSA configuration file, we start by defining the input PCM:

pcm_slave.ins {
    pcm "hw:0,1"
    rate 44100
    channels 4
}

pcm "hw:0,1" refers to the second PCM device (device 1) of the first sound card (card 0) present in the system. In our case, this is the capture device. rate and channels specify the parameters of the stream we want to set up on the device. They are not strictly necessary, but they allow automatic sample rate or sample size conversion to be enabled if desired.
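To make the naming explicit, here is a minimal Python sketch that splits a numeric hw: name into its zero-based card, device and optional subdevice indexes. The parse_hw helper and the HwAddress type are hypothetical illustrations, not part of alsa-lib, and the sketch deliberately ignores names that use a card ID instead of a number (such as hw:KTUSB,0).

```python
import re
from typing import NamedTuple, Optional


class HwAddress(NamedTuple):
    card: int
    device: int
    subdevice: Optional[int]  # None: not specified in the name


# Numeric form only: hw:CARD,DEV or hw:CARD,DEV,SUBDEV (indexes start at 0).
_HW_RE = re.compile(r"^hw:(\d+),(\d+)(?:,(\d+))?$")


def parse_hw(name: str) -> HwAddress:
    """Split a numeric 'hw:' PCM name into card, device and subdevice."""
    match = _HW_RE.match(name)
    if match is None:
        raise ValueError(f"not a numeric hw: PCM name: {name!r}")
    card, device, subdevice = match.groups()
    return HwAddress(int(card), int(device),
                     int(subdevice) if subdevice is not None else None)


addr = parse_hw("hw:0,1")
print(f"card index {addr.card}, device index {addr.device}")
# prints: card index 0, device index 1
```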

Then we can split the inputs:

pcm.mic0 {
    type dsnoop
    ipc_key 12342
    slave ins
    bindings.0 0
}

pcm.mic1 {
    type plug
    slave.pcm {
        type dsnoop
        ipc_key 12342
        slave ins
        bindings.0 1
    }
}

pcm.mic2 {
    type dsnoop
    ipc_key 12342
    slave ins
    bindings.0 2
    bindings.1 3
}

mic0 is of type dsnoop, the plugin that splits capture PCMs. The ipc_key is an integer used internally to share buffers: it has to be unique to the underlying device, and every PCM splitting that device uses the same key, which is why all three definitions use 12342. slave indicates the underlying PCM that will be split; it refers to the PCM we defined before under the name ins. Finally, bindings is an array mapping the PCM channels to its slave channels. This is why mic0 and mic1, which are mono inputs, only use bindings.0, while mic2, being stereo, has both bindings.0 and bindings.1. Overall, mic0 will get channel 0 of our input PCM, mic1 will get channel 1, and mic2 will get channels 2 and 3.
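The effect of bindings can be modelled in a few lines of Python. This sketch only reproduces which hardware channel ends up in which client channel for mic0, mic1 and mic2; the split_capture function and the sample values are hypothetical, and the real dsnoop plugin works on shared ring buffers inside alsa-lib, which the sketch ignores.

```python
from typing import Dict, List

Frame = List[int]  # one sample per channel


def split_capture(frames: List[Frame], bindings: Dict[int, int]) -> List[Frame]:
    """Build the frames one client PCM would see.

    bindings maps client channel -> slave (hardware) channel, like the
    bindings.N entries of a dsnoop definition.
    """
    order = [bindings[client_ch] for client_ch in sorted(bindings)]
    return [[frame[hw_ch] for hw_ch in order] for frame in frames]


# Two frames from a hypothetical 4-channel capture: the first digit is the
# frame (1 or 2), the second digit is the hardware channel.
hw_frames = [[10, 11, 12, 13], [20, 21, 22, 23]]

mic0 = split_capture(hw_frames, {0: 0})
mic1 = split_capture(hw_frames, {0: 1})
mic2 = split_capture(hw_frames, {0: 2, 1: 3})
print(mic0, mic1, mic2)
# prints: [[10], [20]] [[11], [21]] [[12, 13], [22, 23]]
```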

The final interesting thing in this example is the difference between mic0 and mic1. While mic0 and mic2 do not perform any conversion and pass the stream from the slave PCM as is, mic1 uses the automatic conversion plugin, plug. So whatever format and rate the application requests, the stream provided by the sound card will be converted to match. This conversion is done in software and therefore runs on the CPU, which should usually be avoided on an embedded system.
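Since these definitions only differ by name, plugin type, channel list and the optional plug wrapper, they lend themselves to being generated. The split_pcm helper below is a hypothetical Python sketch, not an ALSA tool; it assumes a pcm_slave definition (such as ins) already exists in the same configuration file.

```python
from typing import List


def split_pcm(name: str, plugin: str, ipc_key: int, slave: str,
              channels: List[int], convert: bool = False) -> str:
    """Return an asound.conf stanza exposing `channels` of `slave` as pcm.<name>."""
    inner = [f"type {plugin}", f"ipc_key {ipc_key}", f"slave {slave}"]
    # bindings.N M: channel N of the new PCM is channel M of the slave.
    inner += [f"bindings.{i} {hw_ch}" for i, hw_ch in enumerate(channels)]

    if convert:
        # Wrap in plug: conversions then happen in software, on the CPU.
        body = (["type plug", "slave.pcm {"]
                + ["    " + line for line in inner]
                + ["}"])
    else:
        body = inner

    lines = [f"pcm.{name} {{"] + ["    " + line for line in body] + ["}"]
    return "\n".join(lines)


print(split_pcm("mic1", "dsnoop", 12342, "ins", [1], convert=True))
```

Called as in the last line, it prints a stanza equivalent to the mic1 definition above; passing plugin="dshare" produces the same structure for playback.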

Also, note that the channel splitting happens at the dsnoop level. Doing it at an upper level would mean that all 4 channels would be copied before being split. For example, the following configuration would be a mistake:

pcm.dsnoop {
    type dsnoop
    ipc_key 512
    slave {
        pcm "hw:0,0"
        rate 44100
    }
}

pcm.mic0 {
    type plug
    slave.pcm dsnoop
    ttable.0.0 1
}

pcm.mic1 {
    type plug
    slave.pcm dsnoop
    ttable.0.1 1
}
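To give a feel for the difference, the following Python sketch counts samples under a deliberately rough model, which is an assumption rather than a measurement: with bindings, dsnoop hands each client only its own channels, whereas with the configuration above every client first receives all 4 hardware channels and plug then extracts the one selected by ttable. The samples_moved function is hypothetical.

```python
from typing import List


def samples_moved(frames: int, client_channels: List[int],
                  hw_channels: int, split_in_dsnoop: bool) -> int:
    """Rough count of samples handled by all capture clients over `frames`."""
    total = 0
    for wanted in client_channels:
        if split_in_dsnoop:
            # bindings: dsnoop hands each client only its own channels
            per_frame = wanted
        else:
            # each client gets every hardware channel from dsnoop, then
            # plug/route copies out the one(s) selected by ttable
            per_frame = hw_channels + wanted
        total += frames * per_frame
    return total


RATE = 44100  # one second of audio
good = samples_moved(RATE, [1, 1], hw_channels=4, split_in_dsnoop=True)
bad = samples_moved(RATE, [1, 1], hw_channels=4, split_in_dsnoop=False)
print(good, bad, bad / good)  # prints: 88200 441000 5.0
```

Under this model, the two mono clients of the faulty configuration move five times more samples; real costs also depend on the sample format and buffer handling.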

Audio outputs

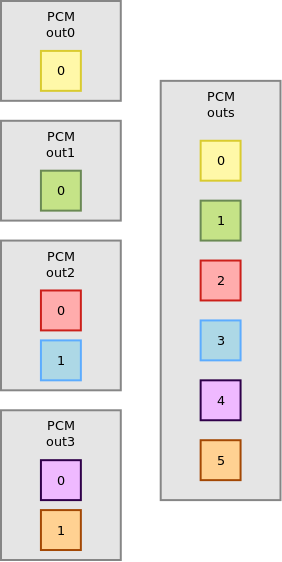

For this example, let’s say we have a 6-channel output that we want to split into 2 mono outputs and 2 stereo outputs, as shown above.

As before, let’s define the slave PCM for convenience:

pcm_slave.outs {
    pcm "hw:0,0"
    rate 44100
    channels 6
}

Now, for the split:

pcm.out0 {
    type dshare
    ipc_key 4242
    slave outs
    bindings.0 0
}

pcm.out1 {
    type plug
    slave.pcm {
        type dshare
        ipc_key 4242
        slave outs
        bindings.0 1
    }
}

pcm.out2 {
    type dshare
    ipc_key 4242
    slave outs
    bindings.0 2
    bindings.1 3
}

pcm.out3 {
    type dmix
    ipc_key 4242
    slave outs
    bindings.0 4
    bindings.1 5
}

out0 is of type dshare. While dmix is usually presented as the counterpart of dsnoop, dshare is more efficient, as it simply gives each stream exclusive access to its channels instead of potentially mixing multiple streams into one in software. Again, the difference in CPU utilization can be significant in the embedded space. The rest brings nothing new compared to the audio input example:

- out1 allows sample format and rate conversion
- out2 is stereo
- out3 is stereo and allows multiple concurrent users, which will be mixed together, as it is of type dmix
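The difference between the two plugins can be illustrated on a single frame. In this simplified Python model (the dmix_frame and dshare_frame names are hypothetical), dmix sums every client into each channel and clamps the result to the 16-bit range, while dshare only copies each client's samples into the channels it is bound to; the sketch rejects two clients bound to the same channel to reflect the exclusive access described above.

```python
from typing import Dict, List, Tuple

S16_MIN, S16_MAX = -32768, 32767


def dmix_frame(client_frames: List[List[int]]) -> List[int]:
    """Sum all clients channel by channel, then saturate to 16-bit."""
    mixed = [0] * len(client_frames[0])
    for frame in client_frames:
        for ch, sample in enumerate(frame):
            mixed[ch] += sample
    return [max(S16_MIN, min(S16_MAX, s)) for s in mixed]


def dshare_frame(hw_channels: int,
                 clients: Dict[str, Tuple[List[int], List[int]]]) -> List[int]:
    """clients maps name -> (bindings, frame); every channel has one owner."""
    out = [0] * hw_channels
    owner: Dict[int, str] = {}
    for name, (bindings, frame) in clients.items():
        for client_ch, hw_ch in enumerate(bindings):
            if hw_ch in owner:
                raise ValueError(f"channel {hw_ch} already used by {owner[hw_ch]}")
            owner[hw_ch] = name
            out[hw_ch] = frame[client_ch]  # plain copy, no arithmetic
    return out


# Two 6-channel streams mixed by dmix: the third channel clips.
print(dmix_frame([[1000, -2000, 30000, 0, 0, 0],
                  [500, 500, 5000, 0, 0, 0]]))
# prints: [1500, -1500, 32767, 0, 0, 0]

print(dshare_frame(6, {"out0": ([0], [111]),
                       "out1": ([1], [222]),
                       "out2": ([2, 3], [333, 444])}))
# prints: [111, 222, 333, 444, 0, 0]
```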

A common mistake here would be to use the route plugin on top of dmix to split the streams: this would first transform each mono or stereo stream into a 6-channel stream and then mix them all together. All these operations are costly in CPU utilization, while dshare is basically free.
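A rough operation count shows why. This Python sketch assumes a simplified cost model (one operation per sample produced by route, plus one per sample added into the mix buffer by dmix) and compares it with dshare copying only the bound channels, for the channel counts of out0 to out3. The ops_per_frame function is hypothetical and the numbers are illustrative, not measurements.

```python
from typing import List


def ops_per_frame(client_channels: List[int], hw_channels: int,
                  route_over_dmix: bool) -> int:
    """Rough per-frame count of sample operations for all playback clients."""
    if route_over_dmix:
        # route expands each client to hw_channels samples, then dmix adds
        # all hw_channels of them into the shared mix buffer.
        return sum(2 * hw_channels for _ in client_channels)
    # dshare: each client only copies the channels it is bound to.
    return sum(client_channels)


clients = [1, 1, 2, 2]  # channel counts of out0, out1, out2 and out3
heavy = ops_per_frame(clients, 6, route_over_dmix=True)
light = ops_per_frame(clients, 6, route_over_dmix=False)
print(heavy, light)  # prints: 48 6
```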

Duplicating streams

Another common use case is copying the same PCM stream to multiple outputs. For example, we have a mono stream, which we want to duplicate into a stereo stream, and then feed this stereo stream to specific channels of a hardware device. This can be achieved using the following configuration snippet:

pcm.out4 {
    type route
    slave.pcm {
        type dshare
        ipc_key 4242
        slave outs
        bindings.0 0
        bindings.1 5
    }
    ttable.0.0 1
    ttable.0.1 1
}

The route plugin duplicates the mono stream into a stereo stream, using the ttable property. Then, the dshare plugin sends the first channel of this stereo stream to the first hardware channel (bindings.0 0), and the second channel of the stereo stream to the sixth hardware channel (bindings.1 5).
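The two steps of out4 can be traced on one frame with a small Python model. The route function applies ttable entries as ttable.CLIENT.SLAVE gains, and dshare_place copies each client channel to the hardware channel named in bindings; both names are hypothetical and the float sample value is arbitrary.

```python
from typing import Dict, List, Tuple

# (client channel, slave channel) -> gain, mirroring ttable.CLIENT.SLAVE
TTable = Dict[Tuple[int, int], float]


def route(frame: List[float], ttable: TTable, slave_channels: int) -> List[float]:
    """Apply a route table: slave[s] += gain * client[c] for every entry."""
    out = [0.0] * slave_channels
    for (client_ch, slave_ch), gain in ttable.items():
        out[slave_ch] += gain * frame[client_ch]
    return out


def dshare_place(frame: List[float], bindings: List[int],
                 hw_channels: int) -> List[float]:
    """Copy client channel i to hardware channel bindings[i].

    Unbound channels stay at 0.0 here; on the real device they belong
    to other clients and this stream never touches them.
    """
    hw = [0.0] * hw_channels
    for client_ch, hw_ch in enumerate(bindings):
        hw[hw_ch] = frame[client_ch]
    return hw


mono = [0.25]  # one frame of a mono stream
stereo = route(mono, {(0, 0): 1.0, (0, 1): 1.0}, slave_channels=2)
print(dshare_place(stereo, bindings=[0, 5], hw_channels=6))
# prints: [0.25, 0.0, 0.0, 0.0, 0.0, 0.25]
```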

Conclusion

When properly used, the dsnoop, dshare and dmix plugins can be very efficient. In our case, simply rewriting the alsa-lib configuration on an i.MX6-based system with a 16-channel sound card dropped the CPU utilization from 97% to 1-3%, leaving plenty of CPU time to run further audio processing and other applications.

Comments

Good day,

Thank you for your post; it really helped me understand how to configure an 8-channel input USB audio interface to expose each input channel as a separate virtual “device”.

I did not, however, fully understand the statement “channel splitting happens at the dsnoop level” and the example given.

In my use case, the device only allows the hardware to be accessed once. This means that if I used the “hw:0,0” notation, I would only be able to run one “arecord” command; the second command would fail with an access error.

For this reason, the bindings method did not work, but the ttable method did.

Is this a big concern or would I be okay?

This is fine, but opening the same sound card multiple times implies mixing samples (there is no other choice), which requires some CPU processing.

Hi!

I am a composer. I write exclusively for orchestra, which needs special care. In a nutshell, 2-channel stereo for orchestral rendition is a huge letdown. Recordings with live orchestras are done on 24-36 independent channels/tracks (analog tape), and sometimes many more thanks to digital technology (on tapeless digital recorders). Nevertheless, no matter how many channels are recorded in the file, all of the tracks are condensed into 2 stereo channels during mixing sessions. On consumer equipment the sound is usually atrocious in high-dynamic situations, e.g. violins are harsh in forte and thread-like in piano, full mixes’ chords lack transparency (one hears chords but not the component sounds), etc. Long story short, I am looking at Linux software packages that have multichannel outputs for 5.1 and up, and at audio equipment (AV receivers with better D/A conversion, multichannel amplifiers, etc.). There already are multichannel DAWs (Digital Audio Workstations with large numbers of MIDI tracks), but I don’t know which of the current Linux operating systems have the best multichannel outputs, that is, at least 8 INDEPENDENT channels that will separately transmit orchestral sections, i.e. high strings, low strings, high woodwinds, low woodwinds and horns, trumpets, low brass, percussion: a total of eight tracks out to 8 channels and 8 (pre)amplifier inputs. The OS would have to be complete: for a composer on a deadline there is simply no time for programming and fishing for plugins, mods and technical artifices. I’d build the system and go ahead and write the music. This is what I am looking for. No offense at all, but there is no time for geeking here. Multichannel is already 11.2 for the cinema; 5.1 is ancient and 7.1 routine, but software doesn’t seem to keep pace, not by a long shot.

Thank you for reading, John.

Hello,

Any recent Linux distribution is able to handle an arbitrary number of input and output channels, provided the hardware exposes them properly. We have been routinely working on 8- to 16-channel sound configurations for our customers. Regarding software, all the common tools support many channels; downmixing should not happen on its own.

Hi,

Great article! I too am using i.MX (6, 7D, 8) platforms for headless audio streamers, but there’s always been one unresolved headache that is still causing problems. It’s about what is called ‘convenience switching’, where multiple applications like MPD, Roon, Bluetooth, AirPlay, etc. all need direct raw access to the same PCM, but only one at a time, a bit like the rotary source selector on an old audio amplifier. In use, all applications are visible on the network, but as soon as the user connects to and uses one (e.g. Roon), the other embedded music services must yield the PCM if they own it. Dmix and other audio mixers cannot be used, as very high audio rates are expected, hence using hw:x,x and not plughw or anything else.

Any suggestions on how the PCM output could simply be switched? I’ve tried JACK and various other mechanisms, but that just added more complexity when dynamically switching music sources.

The only other way I considered is a custom version of the USB audio driver (it’s only ever USB PCMs) which creates 5 duplicate sound cards from the one physical PCM and uses an ioctl or something similar to switch the internals (buffers in/out) in a rotary-switch fashion depending on the source request.

Cheers..

There is indeed no support for dynamically changing PCM routes in alsa-lib, so I’d say the solution would be either to ensure only one of the apps opens the PCM at any time, or to install a sound server. The popular options are indeed JACK and PulseAudio, but PipeWire looks very promising and I plan to use it exclusively from now on.

The last option would be to write your own ALSA plugin to do that; this would certainly be cleaner than implementing the functionality in the kernel.

Hi there

Very interesting information. I am currently working on a 48-channel recorder, based on an AES50-to-USB interface connected to a Raspberry Pi 4B, recording to a 480 GB Kingston SSD. The programming is done, using SoX. In Python it looks like this (snippet of the code that takes care of starting the recorder):

import datetime
import os
import subprocess

my_env = os.environ.copy()
my_env['AUDIODRIVER'] = 'alsa'
my_env['AUDIODEV'] = 'hw:KTUSB,0'

# ledButton is the GUI start/stop button, defined elsewhere in the application
ledButton["text"] = "Turn Recorder off"

filepath = "/media/pi/RECORDER1/Aufnahmen/"
filedt = datetime.datetime.now().strftime("%Y%m%d-%H%M%S")
filename = filepath + filedt + "_recording.caf"

# SoX rec: 48 channels, 24-bit samples, little-endian output, 4096000-byte buffer
subprocess.Popen(['rec', '-q', '--endian', 'little', '--buffer', '4096000',
                  '-c', '48', '-b', '24', filename],
                 env=my_env, shell=False, stdout=subprocess.PIPE)

We are recording to .caf files because we had trouble using .wav due to the 4 GB limit (beyond 4 GB the header becomes corrupt and the duration is not reported correctly).

I am fighting with one issue: buffer overruns. It seems to me that writing to disk sometimes suffers from other CPU activity on the Pi. In a 90-minute recording of 48 channels, we got around 10 dropouts (file size approx. 36 GB). I am looking for assistance here.
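A quick back-of-the-envelope calculation puts these numbers in perspective. The sketch assumes a 48 kHz sample rate, which the comment does not state, together with the 48 channels and 24-bit samples passed to rec; WAV stores its chunk sizes in 32-bit fields, hence the roughly 4 GB limit.

```python
CHANNELS = 48
BYTES_PER_SAMPLE = 3       # 24-bit samples, as requested with "-b 24"
RATE = 48_000              # assumption: the sample rate is not given
WAV_LIMIT = 2**32 - 1      # largest size a 32-bit RIFF field can hold

bytes_per_second = CHANNELS * BYTES_PER_SAMPLE * RATE
ninety_minutes = bytes_per_second * 90 * 60
minutes_to_wav_limit = WAV_LIMIT / bytes_per_second / 60

print(f"{bytes_per_second / 1e6:.3f} MB/s")              # 6.912 MB/s
print(f"{ninety_minutes / 1e9:.1f} GB in 90 minutes")    # 37.3 GB in 90 minutes
print(f"WAV limit after {minutes_to_wav_limit:.1f} min") # WAV limit after 10.4 min
```

With these assumptions, the recorder must sustain about 6.9 MB/s to disk, 90 minutes amount to roughly 37 GB, in line with the approximately 36 GB reported, and a WAV file would hit its size limit after about 10 minutes.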

A second idea is to equip the recorder with a 7″ touchscreen showing not only the recorder start/stop control but also VU meters for all channels. This is where your further developments of ALSA might come in: we might be able to route the incoming audio not only to the SoX rec function, but also to the VU meter. We are in contact with PeppyMeter https://github.com/project-owner/PeppyMeter.doc/wiki to see if we can work together to build a 48-channel VU meter. The current PeppyMeter only works during playback and is a 2-channel solution.

Would you be willing to help? We are open to discussing conditions, as this might become a commercial product.

Regards, Rudolf

Hi Rudolf.

Concerning writing to disc, the following may help.

Consider increasing the RAM size substantially and creating a RAM disk (for example, a tmpfs mount). This is done with one simple command.

A RAM disk acts like a hard disk but exists in RAM only. It is volatile storage and very fast, and I expect writing to it places less load on the CPU and other parts of the system.

Once the job is done, simply copy the file from the RAM disk to non-volatile storage such as an HDD or SSD.

Great article.

Splitting outputs was exactly what I was looking for!

There is a small error in your config though:

pcm_slave.outs {
    pcm "hw:0,0"
    rate 44800
    channels 6
}

A rate of 44800 does not exist, and this prevented things from working at first.

Changing it to 44100 (or 48000) solved the problem.

Thanks again.

Fixed, thank you!

Hello!

Thanks for the article. It really helped me understand how to configure ALSA devices (which seems to be a topic without introductory or easy-to-find documentation).

I’m working on an embedded system with an 8-channel sound card whose channels are fed by different chips, so this method will be useful for us. I would like to ask if you could explain how these new virtual devices can be exposed through a sound server like PulseAudio or PipeWire. I’ve been trying to use “load-module module-alsa-source device=device_name”, but that doesn’t work. Could you point me in the right direction?

Thanks again for the audio articles!

I would say that when using PipeWire, you are better off doing the mixing and routing in PipeWire rather than in alsa-lib. Indeed, PulseAudio and PipeWire work with full ALSA devices, not with PCMs. There is some (not so great) documentation here:

https://gitlab.freedesktop.org/pipewire/pipewire/-/wikis/Virtual-Devices#create-an-aggregate-sinksource

I’m definitely planning to write more about PipeWire in the future.

Hi,

Hope this is the right place to ask. I have an issue on my embedded Linux device where sound on the headphones that is only supposed to be played on the left channel is also played (more quietly) on the right channel, and vice versa. Could that be fixed by using dsnoop, dshare or dmix?

It seems to me that you have some crosstalk. If the stream you play has left and right well separated, this can be caused by a mismatch in the audio format between your CPU DAI and your codec DAI (for example, left-justified versus I2S). Otherwise, the hardware is causing it.

Hello and thanks for the article. Hope you can help with my question.

I do not understand something.

I have a simple USB audio dongle, and “aplay -l” and “arecord -l” give the same output:

pi@box1:/home $ arecord -l
**** List of CAPTURE Hardware Devices ****
card 1: Device [USB Audio Device], device 0: USB Audio [USB Audio]
  Subdevices: 1/1
  Subdevice #0: subdevice #0
pi@box1:/home $ aplay -l
**** List of PLAYBACK Hardware Devices ****
card 1: Device [USB Audio Device], device 0: USB Audio [USB Audio]
  Subdevices: 1/1
  Subdevice #0: subdevice #0
pi@box1:/home $

It has mono capture and stereo playback!

My Linux software expects a stereo mic. How would I convert this mic to stereo?

Also, when I say hw:1,0, does it refer to the playback device or the capture device?

How would I change the channel bindings for the capture device and leave them intact for the playback device, if they are both the same “hw:1,0”?

P.S. I use only ALSA without X-server on Raspbian Lite OS.

Hey,

this is one of the best articles describing the configuration of ALSA! Thanks for your great work. However, I have some trouble understanding the bindings option for the audio output.

It says

bindings.0 2
bindings.0 3

for out2. Is it possible that there is a typo in the config and that it should rather be

bindings.0 2
bindings.1 3

The same would apply to out3.

Cheers

This has now been corrected

Still a great article after all these years, just one bracket too many:

pcm.out1 {
    type plug {   <- this one

Hello.

Thank you for this tutorial that is great 😀

I am going crazy trying to mix the 4 physical inputs of my Zoom H5 device into a mono channel and copy it to Loopback, in order to record from the Loopback device using audio apps.

This is my current .asoundrc file:

—————————

pcm.ZoomH5 {
    type hw
    card H5
    device 0
    subdevice 0
    nonblock true
}

ctl.ZoomH5 {
    type hw
    card H5
}

pcm.loopin {
    type plug
    slave.pcm "hw:Loopback,0,0"
}

pcm.loopout {
    type plug
    slave.pcm "hw:Loopback,1,0"
}

pcm.mixinputs {
    type dsnoop
    ipc_key 234884
    slave {
        pcm ZoomH5
        channels 4
    }
}

pcm.record_mono {
    type plug
    slave.pcm "mixinputs"
}

pcm.mixinputs_multi {
    type route
    slave.pcm {
        type multi
        slaves {
            a.pcm record_mono
            a.channels 1
            b.pcm loopin
            b.channels 1
        }
        bindings {
            0 { slave a channel 0 }
        }
    }
    ttable.0.0 1
}

—————————

Then, if I record from device “record_mono” or “mixinputs_multi” using:

arecord -f dat -c 1 -D mixinputs_multi ./shared/foobar.wav

I get a stereo wav file with all the physical inputs mixed in a mono channel (in both L and R).

But when I try to record from the Loopback device using:

arecord -f dat -c 1 -D "hw:Loopback,1,0" ./shared/foobar.wav

I get silence. The same from the device “hw:Loopback,0,0”.

Please, if you can help me find what I am doing wrong, I would appreciate it 😀

Thanks 🙂

Hi melodiante,

My first reaction whenever I see an ALSA config is to draw it out. Yours looks like this: https://bootlin.com/wp-content/uploads/2024/11/2024-11-05-bootlin-blog-loopback.jpg

I don’t get the intent. Here, your arecord tries to capture from both your ZoomH5 hardware and your loopback. Try (1) coming up with a diagram, then (2) translating it into an alsa-lib config; it should be more sensible.

Side note: your route bindings are odd, as there is a single entry. I guess that means you do not send anything into your loopback, but in any case that is not what you want to do.

One thing catches my attention: you mention wanting to expose your stream to applications; that probably means you have a sound daemon on the target that could handle your use case. Have you looked into that? PipeWire can do that without breaking a sweat, and so can JACK. PulseAudio too, I guess, but I have less experience with it.

Good luck,

Théo

Thank you very much for your effort. Sorry, but I am a newbie and absolutely lost with ALSA configuration.

What I need is for raspi-looper (https://github.com/RandomVertebrate/raspi-looper) to take the audio from all the inputs of the Zoom H5 through the Loopback device. That way, it doesn’t matter which audio input I am playing into (the stereo mics, a bass on input 3 or a guitar on input 4): the input to the looper will always be the sum of the 4 input channels.

To get this, I was running tests with arecord. Using “arecord -f dat -c 1 -D mixinputs ./shared/foobar.wav”, I get what I want, but “mixinputs” is not a device I can select in raspi-looper.

That is why I wanted to copy the “mixinputs” stream to the first channel of the Loopback,0,0 device and then use Loopback,1,0 as the input device in raspi-looper.

In summary: “mixinputs” mixes the 4 input channels of the Zoom H5. Now I need to copy this stream to a device that can be reached by any audio app, like raspi-looper.

This is my latest .asoundrc, but I still cannot get anything but silence when recording from “hw:loopback,1,0”:

pcm.ZoomH5 {
    type hw
    card H5
    device 0
    nonblock true
}

ctl.ZoomH5 {
    type hw
    card H5
}

pcm.loopin {
    type plug
    slave.pcm "hw:Loopback,0,0"
}

pcm.loopout {
    type plug
    slave.pcm "hw:Loopback,1,0"
}

pcm.mixinputs {
    type plug
    slave.pcm {
        type dsnoop
        ipc_key 234884
        slave {
            pcm ZoomH5
            channels 4
        }
    }
}

pcm.mixinputsdmixed {
    type dmix
    ipc_key 2348
    slave.pcm mixinputs
}

# Use this to output to loopback
pcm.dmixerloop {
    type plug
    slave {
        pcm loopin
        channels 1
        rate 48000
    }
}

# Sends to the two dmix interfaces
pcm.quad {
    type route
    slave.pcm {
        type multi
        # Necessary to have both slaves be dmix; both as hw doesn't give errors, but wouldn't
        slaves.a.pcm "mixinputs"
        slaves.a.channels 1
        slaves.b.pcm "dmixerloop"
        slaves.b.channels 1
        bindings {
            0 { slave a; channel 0; }
            1 { slave b; channel 0; }
        }
    }
    ttable.0.0 1
}

———–

Thank you very much for your time 🙂

I don’t expect you run a custom embedded distro on your platform, meaning you probably already have an audio daemon running. You do not have to do everything with static alsa-lib config files. You’ll have to read up on how that audio server works! Even if you stick with the ALSA API (PortAudio also supports the JACK API), all audio servers can create virtual ALSA devices and can therefore interface with your app.

Summary: look into what audio daemon your distro provides, and use that. Good luck!

Thank you for sharing this article.

It’s very useful for me to split multiple channels from a device.

Thanks!!

Great article. One question:

pcm.out2 {
    type dshare
    ipc_key 4242
    slave outs
    bindings.0 2
    bindings.0 3
}

In this config, the binding indices seem to be wrong. Shouldn’t it be bindings.0 2 and bindings.1 3, like below?

pcm.out2 {
    type dshare
    ipc_key 4242
    slave outs
    bindings.0 2
    bindings.1 3
}

This has now been corrected

Hi,

I have a requirement similar to the one mentioned in the link below. I have also posted the same request on the ALSA forum. Could you please help me find a solution for this?

https://github.com/alsa-project/alsa-lib/issues/443

Based on what you need, you should have a look at PipeWire. ALSA itself will not solve your use case.

I’m using the MoOde audio player on a Raspberry Pi to play audio through two USB devices: headphones (card 3: Device_1) for the full spectrum and a subwoofer (card 0: Device) for low frequencies (20–100 Hz) in a meditation lounge setup. The audio source is a NAS on a Mac. MoOde’s MPD only outputs to one device at a time, despite my attempts to configure mpd.conf for multiple outputs. My goal is simultaneous playback: headphones in stereo, subwoofer in mono with low-pass filtering. I know that MoOde’s backend uses ALSA. Is this something I can achieve with an approach similar to the one described in this article?

Thanks

You can indeed do mixing inside alsa-lib as described in the “Duplicating streams” subsection. However, MPD’s stable version supports multiple ALSA outputs; that would be the lead I would look into first.

Ah, thank you! This was super helpful. I was able to successfully configure two ALSA outputs in the MPD config file. However, I still need to apply a low-pass filter on the subwoofer (card 0). I tried applying the LADSPA low-pass filter at the MPD config level, to no avail. I’m going to ask on the MoOde forums whether my method was correct, but I did see other users on those forums report that they use ALSA asound.conf files to achieve this.

I gave it a go, but when I applied the refactored MPD config and the new asound.conf file, I no longer heard any sound coming from the woofer. So it’s either my MPD config or my ALSA config. Would you mind reviewing my ALSA config for possible errors? (I will add some context for relevance):

MPD Config (for defining audio output devices):

audio_output {
    type "alsa"
    name "Headphones"
    device "hw:3,0"
    mixer_type "software"
    enabled "yes"
}

audio_output {
    type "alsa"
    name "Subwoofer"
    device "subwoofer_filtered"
    mixer_type "software"
    enabled "yes"
}

ALSA asound.conf:

pcm.subwoofer_filtered {
    type ladspa
    slave.pcm "plughw:0,0"  # Subwoofer device
    path "/usr/lib/ladspa"
    plugins [
        {
            label "lowpass_iir"
            input {
                controls [ 100 ]
            }
        }
    ]
}

—

Note: I’ve confirmed that I’ve installed the ladspa plugin and set env path properly.

listplugins | grep lowpass:

/usr/lib/ladspa/lowpass_iir_1891.so:
Glame Lowpass Filter (1891/lowpass_iir)

echo $LADSPA_PATH:

/usr/lib/ladspa

Thanks

The alsa-lib documentation mentions the “id” property to pick which LADSPA plugin should be used, which you are not using.

Otherwise, you could use MPD’s filtering ability on outputs, combined with a low-pass filter from FFmpeg.

Got it, this was super helpful. Much gratitude to you and your team!